The following blog post was originally posted in December 2008. I updated it slightly to fit current times:

This blog post has turned into more than just a post. It’s more of a paper. In any case, in the post I am trying to capture a number of concepts that define the log management and analysis market (as well as the SIEM or SEM markets).

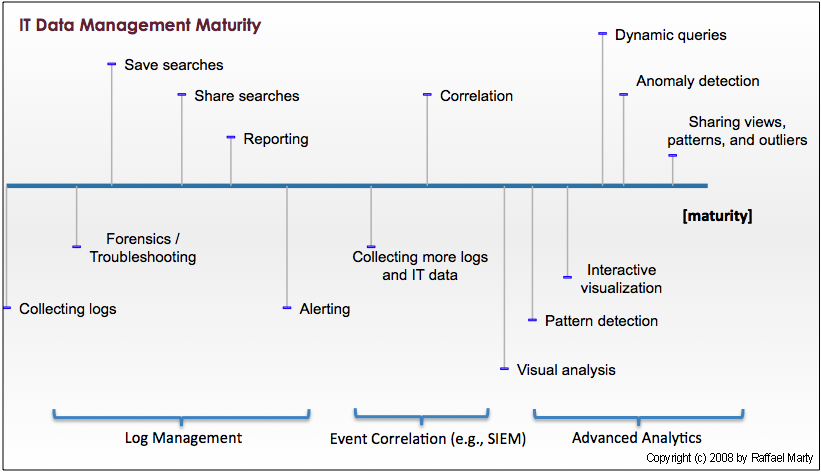

Any company or IT department/operation can be placed along the maturity scale (see Figure 1). The further to the right, the more mature the operations with regard to IT data management. A company generally moves along the scale. A movement to the right does not just involve the purchase of new solutions or tools; it also needs to come with a new set of processes. Products often help, but they are not a must.

The further one moves to the right, the fewer companies or IT operations can be found operating at that scale. Also note that the products that companies use are called log management tools for the ones located on the left side of the scale. In the middle, it is the security information and event management (SIEM) products that are being used, and on the right side, companies have to look at either in-house tools, scripts, or in some cases commercial tools in markets other than the security market. Some SIEM tools offer a few advanced analytics capabilities, but they are very rudimentary. The reason why there are no security-specific tools and products on the right side becomes clear when we understand a bit better what the scale encodes.

Figure 1: IT Data Management Maturity Scale.

The Maturity Scale

Let us have a quick look at each of the stages on the scale. (Skip over this if you are interested in the conclusions and not the details of the scale.)

- Do nothing: I didn’t even explicitly place this stage on the scale. However, there are a great many companies out there that do exactly this. They don’t collect data at all.

- Collecting logs: At this stage of the scale, companies are collecting some data from a few data sources for retention purposes. Sometimes compliance is the driver for this. You will mostly find things like authentication logs or maybe message logs (such as email transaction logs or proxy logs). The number of different data sources is generally very small. In addition, you mostly find plain log files here, not more specialized IT data such as multi-line application logs or configurations. A new trend that we are seeing here is the emergence of the cloud. A number of companies are looking to move IT services into the cloud and have them delivered by service providers. The same is happening in log management. It doesn’t make sense for small companies to operate and maintain their own logging solutions. A cloud-based offering is perfect for those situations.

- Forensics / Troubleshooting: While companies in the previous stage simply collect logs for retention purposes, companies in this stage actually make use of the data. In the security arena they are conducting forensic investigations after something suspicious was noticed or a breach was reported. In IT operations, the use-case is troubleshooting. Take email logs, for example. A user wants to know why he did not receive a specific email. Was it eaten by the spam filter or is something else wrong?

- Save searches: I don’t have a better name for this. In the simplest case, someone saves the search expression used with a `grep` command. In other cases, where a log management solution is used, users are saving their searches. At this stage, analysts can re-use their searches at a later point in time to find the same type of problems again, without having to reconstruct the searches every single time.

- Share searches: If a search is good for one analyst, it might be good for another one as well. Analysts at some point start sharing their ways of identifying a certain threat or analyzing a specific IT problem. This greatly improves productivity.

- Reporting: Analysts need reports. They need reports to communicate findings to management. Sometimes they need reports to communicate among themselves or with other teams. Generally, the reporting capabilities of log management solutions are fairly limited. They are extended in the SEM products.

- Alerting: This capability lives in somewhat of a gray zone. Some log management solutions provide basic alerting, but generally, you will find this capability in a SEM. Alerting is used to automate some of the manual troubleshooting that is done among companies on the left side of the scale. Instead of waiting for a user to complain that there is something wrong with his machine and then looking through the log files, analysts are setting up alerts that will notify them as soon as known signs of failure show up. Things like monitoring free disk space are use-cases that are automated at this point. This can save a lot of manual labor and help drive IT towards a more automated and proactive discipline.

- Collecting more logs and IT data: More data means more insight, more visibility, broader coverage, and more uses. For some use-cases we now need new data sources. In some cases it’s the more exotic logs, such as multi-line application logs, instant messenger logs, or physical access logs. In addition more IT data is needed: configuration files, host status information, such as open ports or running processes, ticketing information, etc. These new data sources enable a new and broader set of use-cases, such as change validation.

- Correlation: The manual analysis of all of these new data sources can get very expensive and too resource-intensive. This is where SEM solutions can help automate a lot of the analysis. Examples are correlating trouble tickets with file changes, or correlating IDS data with operating system logs. (Note that I didn’t say IDS and firewall logs!) There is much, much more to correlation, but that’s for another blog post.
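As a rough illustration of the stages from saving searches through correlation, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the sample log lines, the regular expressions, the names `SAVED_SEARCHES`, `run_search`, `share_searches`, `alerts`, and `correlate`, and the one-hour correlation window. It does not describe how any particular log management or SEM product works.

```python
import json
import re
from datetime import datetime, timedelta

# Hypothetical saved searches: a name mapped to a regular expression,
# much like a grep expression kept in a notes file.
SAVED_SEARCHES = {
    "failed_ssh_login": r"sshd.*Failed password for (?:invalid user )?(\S+)",
    "disk_almost_full": r"disk usage (9[0-9]|100)%",
}

# Made-up sample log lines; real formats will differ.
LOG = [
    "Jun  7 10:01:02 web1 sshd[311]: Failed password for root from 10.0.0.5 port 4711 ssh2",
    "Jun  7 10:02:00 web1 monitor: disk usage 97% on /var",
    "Jun  7 10:03:00 web1 CRON[99]: session opened for user root",
]


def run_search(name, lines, searches=SAVED_SEARCHES):
    """Save searches: re-run a stored expression instead of rebuilding it."""
    pattern = re.compile(searches[name])
    return [line for line in lines if pattern.search(line)]


def share_searches(searches=SAVED_SEARCHES):
    """Share searches: serialize them so another analyst can load them."""
    return json.dumps(searches, indent=2, sort_keys=True)


def alerts(lines, searches=SAVED_SEARCHES):
    """Alerting: evaluate every saved search against incoming lines."""
    for line in lines:
        for name, expr in searches.items():
            if re.search(expr, line):
                yield name, line


def correlate(tickets, file_changes, window=timedelta(hours=1)):
    """Correlation: pair each ticket with file changes on the same host
    that happened at most `window` before the ticket was opened."""
    pairs = []
    for ticket in tickets:
        for change in file_changes:
            delta = ticket["opened"] - change["time"]
            if ticket["host"] == change["host"] and timedelta(0) <= delta <= window:
                pairs.append((ticket["id"], change["path"]))
    return pairs
```

The design point is that the same stored expression serves three stages: an analyst re-runs it by hand, hands it to a colleague, and finally lets it run unattended as an alert. The correlation function is deliberately naive (a nested loop over two small lists) and only shows the idea of joining two data sources on host and time.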

Note the big gap on the scale between correlation and the next step, visual analysis. It takes a lot for an organization to cross this chasm. Also note that the individual milestones on the right side are drawn fairly close to each other. In reality, think of this as a log scale: these milestones can be very, very far apart, and the drawn distances no longer tell you much.

- Visual analysis: It is not very efficient to read through thousands of log messages and figure out trends or patterns, or even understand what the log entries are communicating. Visual analysis takes the textual information and packages it into an image that conveys the contents of the logs. For more information on the topic of security visualization see Applied Security Visualization.

- Pattern detection: One could view this as advanced correlation. One wants to know about patterns. Is it normal that, when the DNS server is doing a zone transfer, you also find a number of IDS alerts along with some firewall log entries? If a user browses the Web, what pattern of log entries is normally seen? Pattern detection is the first step towards understanding an IT environment. The next step is to then figure out when something is an outlier and not part of a normal pattern. Note that this is not as simple as it sounds. There are various levels of maturity needed before this can happen. Just because something is different does not mean that it’s a “bad” anomaly or an outlier. Pattern detection engines need a lot of care and training.

- Interactive visualization: Earlier we talked about simple, static visualization to better understand our IT data. The next step in the application of visualization is interactive visualization. This type of visualization follows the principle of: “overview first, zoom and filter, then details on demand.” This type of visualization along with dynamic queries (the next step) is incredibly important for advanced analysis of IT data.

- Dynamic queries: The next step beyond interactive, single-view visualizations is multiple views of the same data. All of the views are linked together. If you select a property in one graph, the selection propagates to the others. This is also called dynamic queries. This is the gist of fast and efficient analysis of your IT data.

- Anomaly detection: Various products are trying to implement anomaly detection algorithms in order to find outliers, or anomalous behavior, in the IT environment. There are many approaches that people are trying to apply. So far, however, none of them has had broad success. Anomaly detection as it is known today is best understood for closed use-cases. For example, network behavior anomaly detection (NBAD) systems use anomaly detection algorithms to flag interesting findings in network flows. As of today, nobody has successfully applied anomaly detection across heterogeneous data sources.

- Sharing views, patterns, and outliers: The last step on my maturity scale is the sharing of advanced analytic findings. If I know that certain versions of the BIND DNS server tend to trigger a specific set of Snort IDS alerts, it is something that others should know as well. Why not share it? Unfortunately, there are no products that allow us to share this knowledge.
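Two of the stages above, dynamic queries and anomaly detection, can be hinted at in a few lines of Python. This is only a sketch under stated assumptions: the events are made up and already parsed, the names `EVENTS`, `linked_views`, and `find_outliers` are hypothetical, and the outlier test is a plain standard-deviation threshold chosen for brevity, not an algorithm taken from any product mentioned in this post.

```python
from collections import Counter
from statistics import mean, stdev

# Hypothetical, already-parsed events; real data might come from firewall or flow logs.
EVENTS = [
    {"src": "10.0.0.5", "dport": 53, "action": "pass"},
    {"src": "10.0.0.5", "dport": 80, "action": "pass"},
    {"src": "10.0.0.9", "dport": 22, "action": "block"},
    {"src": "10.0.0.9", "dport": 80, "action": "pass"},
]


def linked_views(events, selection=None):
    """Dynamic query: apply one selection and recompute every view from it,
    so a choice made in one view propagates to all the others."""
    selection = selection or {}
    selected = [
        e for e in events
        if all(e.get(field) == value for field, value in selection.items())
    ]
    return {
        "by_source": Counter(e["src"] for e in selected),
        "by_port": Counter(e["dport"] for e in selected),
        "by_action": Counter(e["action"] for e in selected),
    }


def find_outliers(baseline, observed, threshold=3.0):
    """Flag observed counts that lie more than `threshold` standard
    deviations away from the mean of the baseline counts."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # requires at least two baseline values
    outliers = []
    for label, value in observed.items():
        if sigma == 0:
            is_outlier = value != mu
        else:
            is_outlier = abs(value - mu) / sigma > threshold
        if is_outlier:
            outliers.append((label, value))
    return outliers
```

Calling `linked_views(EVENTS, {"src": "10.0.0.9"})` recomputes the port and action views for that one source, which is the propagation described for dynamic queries. `find_outliers` only works on a single, closed series such as hourly event counts, and a flagged value is merely different from the baseline, not necessarily bad.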

While reading the maturity scale, note the gaps between the different stages. They signify how quickly after the previous step a new step sets in. If you were to look at the scale from a time-perspective, you would start an IT data management project on the left side and slowly move towards the right. Again, the gaps are fairly indicative of the relative time such a project would consume.

Related Quantities

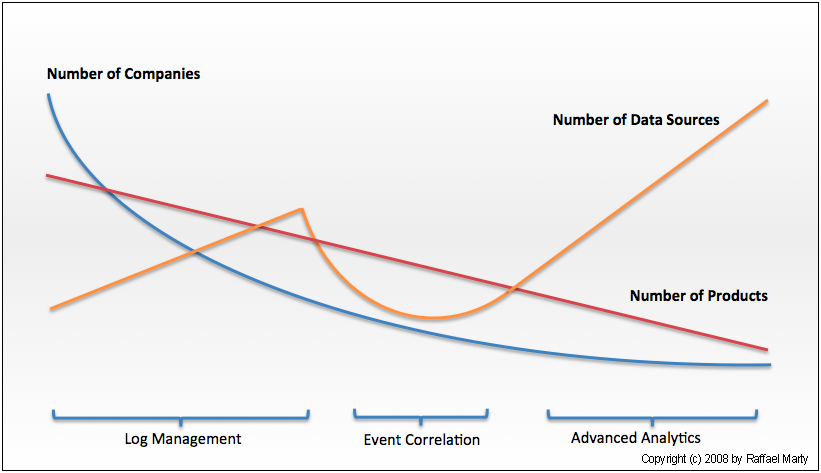

The scale could be overlaid with lines showing some interesting, related properties. I decided not to do so in favor of legibility. Instead, have a look at Figure 2. It encodes a few properties: number of products on the market, number of customers / users, and number of data sources needed at that stage of maturity.

Figure 2: The number of products, companies, and data sources that are used / available along the maturity scale.

Why are there so few products on the right side of the scale? The most obvious reason is one of market size. There are not many companies on the right side. Hence there are not many products. It is sort of a chicken-and-egg problem. If there were more products, there might be more companies using them. Maybe. However, there are more reasons. One of them is that in order to get to the right side, a company has to traverse the entire scale on the left. This means that the potential market for advanced analytics is the number of companies that linger just before the advanced analytics stage itself. That market is a very small one. The next question is why there are not more companies close to the advanced analytics stage. There are multiple reasons. Some of them are:

- Not many environments manage to collect enough data to implement advanced analytics across heterogeneous data. Too many environments are stuck with just a few data sources. There are organizational, architectural, political, and technical reasons why this is so.

- A lack of qualified people (engineers, architects, etc.) is another reason. Not many companies have staff who understand how to deal with all the data collected. Not many people understand how to interpret the vast number of different data sources.

The effects of these phenomena play yet again into the availability of products for the advanced analytics side of the scale. Because there are not many environments that actually collect a diverse set of IT data, companies (or academia) cannot conduct research on the subject. And if they do, they mostly get it wrong or capture just a very narrow use-case.

What Else Does the Maturity Scale Tell Us?

Let us have a look at some of the other things that we can learn from/should know about the maturity scale:

- What does it mean for a company to be on the far right of the scale?

  - In-depth understanding of the data

  - Understanding of how to apply advanced analytics (visualization theory, anomaly detection, etc.)

  - Baseline of the behavior in the organization’s environment (needed, for example, for anomaly detection)

  - Understanding of the context of the data gathered, such as the network topology, the properties of the assets, etc.

  - Having to employ knowledgeable people. These experts are scarce and expensive.

  - Collecting all log data, which is hard!

- What are some other preconditions to live on the right side?

  - A mature change management process

  - Asset management

  - IT infrastructure documentation

  - Processes to deal with the findings/intelligence from advanced analytics

  - A security policy that tells what is allowed and intended and what is not. (Have you ever put a sniffer on the network to see what traffic there is? Did you understand all of it? This is pretty much the same thing: you put a huge sniffer on your IT environment and try to explain everything. Wow!)

  - Understanding the environment to the point where questions like “What’s really normal?” are answered quickly. Don’t be fooled. This is nearly impossible. There are so many questions that need to be answered, such as: “Is a DNS server that generates ICMP messages every now and then an anomaly? Is it a security problem? What is the payload of the ICMP message? Maybe an information leak?”

- What’s the return on investment (ROI) for living on the right side of the scale?

  - It’s just not clear!

  - Isn’t it cheaper to ignore than to discover?

  - What do you intend to find and what will you find?

  - So, what’s the ROI? It’s hard to measure, but you will be able to:

    - Detect problems earlier

    - Uncover attacks and policy violations quicker

    - Prevent information leaks

    - Reduce down-time of infrastructure and applications

    - Reduce labor of service desk and system administration

    - Run more stable applications

    - etc.

- What else?

Pretty awesome… I like this version more than 2008. In fact, more than my own even 🙂 http://chuvakin.blogspot.com/2010/02/logging-log-management-and-log-review.html

Comment by Anton Chuvakin — June 8, 2010 @ 6:25 pm
