March 31, 2021

Asset management is one of the core components of many successful security programs. I am an advisor to Panaseer, a startup in the continuous compliance management space. I recently co-authored a blog post on my favorite security metric that is related to asset management:

How many assets are in the environment?

A simple number, but one that tells a complex story when collected over time. The metric also has a vast number of derivatives that are important to understand, and it is challenging to collect correctly. Just think about it: how would you know how many assets there are at every moment in time? How do you collect that information in real time?

The metric is also great to start with to then break it down along additional dimensions. For example:

- How many assets are managed versus unmanaged (e.g., IoT devices)?

- Who are the owners of the assets and how many assets can we assign an owner for?

- What does the metric look like broken down by operating system, by business unit, by department, by assets that have control violations, etc.?

- Where is the asset located?

- Who is using the asset?
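To make these breakdowns a bit more tangible, here is a minimal sketch of how the base metric and a few of its derivatives could be computed. The inventory records and their field names (`managed`, `owner`, `os`, `unit`) are assumptions made up for illustration; a real inventory will look different and will be far less clean.

```python
from collections import Counter

# Hypothetical inventory records; the field names are assumptions for illustration.
assets = [
    {"id": "a1", "managed": True,  "owner": "alice", "os": "Windows", "unit": "Finance"},
    {"id": "a2", "managed": True,  "owner": None,    "os": "Linux",   "unit": "Engineering"},
    {"id": "a3", "managed": False, "owner": None,    "os": "Unknown", "unit": "Facilities"},
    {"id": "a4", "managed": True,  "owner": "bob",   "os": "Linux",   "unit": "Engineering"},
]


def breakdown(records, field):
    """Count assets per distinct value of one dimension."""
    return Counter(r[field] for r in records)


def owner_coverage(records):
    """Fraction of assets that have an assigned owner."""
    if not records:
        return 0.0
    return sum(1 for r in records if r["owner"]) / len(records)


total = len(assets)                        # the base metric
by_managed = breakdown(assets, "managed")  # managed versus unmanaged
by_os = breakdown(assets, "os")            # one of many possible dimensions
coverage = owner_coverage(assets)          # how many assets have an owner

print(total, dict(by_managed), dict(by_os), coverage)
```

The counting is the easy part. The hard part, as noted above, is getting a complete and current list of records in the first place.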

And then, as with any metric, we can look at the metrics not just as a single instance in time, but we can put them into context and learn more about our asset landscape:

- How does the number behave over time? Any trends or seasonalities?

- Can we learn the uncertainty associated with the metric itself? Or in other terms, what’s the error range?

- Can we predict the asset landscape into the future?

- Are there certain behavioral patterns around when we see the assets on the network?

I am just scratching the surface of this metric. Read the full blog post to learn more and explore how continuous compliance monitoring can help you get your IT environment under control.

August 2, 2019

In my last blog post I highlighted some challenges with a research approach from a paper that was published at the “Deep Learning and Security Workshop (DLS 2019)”, a workshop co-located with IEEE S&P. The same workshop featured another paper that piqued my interest: Exploring Adversarial Examples in Malware Detection.

This paper highlights the problem of needing domain experts to build machine learning approaches for security. You cannot rely on pure data scientists, without a solid security background or at least a very solid understanding of the domain, to build solutions. What a breath of fresh air. I wholeheartedly agree with this. But let’s look at how the authors went about their work.

The example that is used in the paper is in the area of malware detection; a problem that is a couple of decades old. The authors looked at binaries as byte streams and initially argued that we might be able to get away without feature engineering by just feeding the byte sequences into a deep learning classifier – which is one of the premises of deep learning, not having to define features for it to operate. The authors then looked at some adversarial scenarios that would circumvent their approach. (Side bar: I wish Cylance had read this paper a couple years ago). The paper goes through some ROC curves and arguments to end up with some lessons learned:

- Training sets matter when testing robustness against adversarial examples

- Architectural decisions should consider effects of adversarial examples

- Semantics is important for improving effectiveness [meaning that instead of just pushing a binary stream into the deep learner, carefully crafting features is going to increase the efficacy of the algorithm]

Please tell me, which of these three is non-obvious? I don’t know that we can set the bar any lower for security data science.

I want to specifically highlight the last point. You might argue that’s the one statement that’s not obvious. The authors basically found that, instead of feeding simple byte sequences into a classifier, there is a lift in precision if you feed additional, higher-level features. Anyone who has looked at byte code before or knows a little about assembly should know that you can achieve the same program flow in many ways. We must stop comparing security problems to image or speech recognition. Binary files, executables, are not independent sequences of bytes. There is program flow, different ‘segments’, dynamic changes, etc.
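A tiny sketch can illustrate the point that the same program behavior can hide behind different byte sequences. It uses Python bytecode as a stand-in for native executables, which is an assumption for the sake of a runnable example; the two functions are made up and have nothing to do with the paper's data set.

```python
def double_by_multiply(x):
    return x * 2


def double_by_add(x):
    return x + x


# Identical behavior on every input we try ...
same_behavior = all(
    double_by_multiply(n) == double_by_add(n) for n in range(-100, 101)
)

# ... but the compiled instruction bytes are not the same sequence.
same_bytes = double_by_multiply.__code__.co_code == double_by_add.__code__.co_code

print("same behavior:", same_behavior)
print("same byte sequence:", same_bytes)
```

A classifier that only sees the raw bytes has to learn on its own that these two sequences mean the same thing. A feature that captures the semantics ("doubles its input") encodes that knowledge up front.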

We should look to other disciplines (like image recognition) for inspiration, but we need different approaches in security. Get inspiration from other fields, but understand the nuances and differences in cyber security. We need to add security experts to our data science teams!

July 30, 2019

Over the weekend I was catching up on some reading and came across the “Deep Learning and Security Workshop (DLS 2019)”. With great interest I browsed through the agenda and read some of the papers / talks, just to find myself quite disappointed.

It seems like not much has changed since I launched this blog. In 2005, I found myself constantly disappointed with security articles and decided to outline my frustrations on this blog. That was the very initial focus of this blog. Over time it morphed more into a platform to talk about security visualization and then artificial intelligence. Today I am coming back to some of the early work of providing, hopefully constructive, feedback to some of the work out there.

The research paper I am looking at is about building a deep learning based malware classifier. I won’t comment on the fact that every AV company has been doing this for a while (but learned from their early mistakes of not engineering ‘intelligent’ features). I also won’t discuss the machine learning architecture that is introduced. What I will take issue with is the approach that was taken and the conclusions that were drawn:

- The paper uses a data set that has no ground truth, which is very normal in network security. But it needs to be taken into account. Any conclusion that is made is only relative to the traffic that the algorithm was tested on, at the time of testing, and under the configuration used (IDS signatures). The paper doesn’t discuss adoption or changes over time. It’s a bias that needs to be clearly taken into account.

- The paper uses a supervised approach leveraging a deep learner. One of the consequences is that this system will have a hard time detecting zero days. It will have to be retrained regularly. Interestingly enough, we are in the same world as the anti virus industry when they do binary classification.

- Next issue. How do we know what the system actually captures and what it does not?

- This is where my recent rants on ‘measuring the efficacy’ of ML algorithms come into play. How do you measure the false negative rates of your algorithms in a real-world setting? And even worse, how do you guarantee those rates in the future?

- If we don’t know what the system can detect (true positives), how can we make any comparative statements between algorithms? We can make a statement about this very setup and this very data set that was used, but again, we’d have to quantify the biases better.

- In contrast to the supervised approach, the domain expert approach has a non-zero chance of finding future zero days due to the characterization of bad ‘behavior’. That isn’t discussed in the paper, but is a crucial fact.

- The paper claims a 97% detection rate with a false positive rate of less than 1% for the domain expert approach. But that’s with domain expert “Joe”. What about if I wrote the domain knowledge? Wouldn’t that completely skew the system? You have to somehow characterize the domain knowledge. Or quantify its accuracy. How would you do that?
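To see why missing ground truth is such a problem for the numbers discussed above, it helps to write down how these rates are computed. The following is a minimal sketch; the counts are hypothetical and were chosen only so that they produce a "97% detection, under 1% false positives" style result. They are not taken from the paper.

```python
def rates(true_pos, false_pos, true_neg, false_neg):
    """Detection rate, false positive rate, and false negative rate from labeled counts."""
    detection_rate = true_pos / (true_pos + false_neg)
    false_positive_rate = false_pos / (false_pos + true_neg)
    false_negative_rate = false_neg / (true_pos + false_neg)
    return detection_rate, false_positive_rate, false_negative_rate


# Hypothetical counts for illustration only.
dr, fpr, fnr = rates(true_pos=97, false_pos=9, true_neg=991, false_neg=3)
print(f"detection rate {dr:.3f}, false positive rate {fpr:.3f}, false negative rate {fnr:.3f}")
```

Every one of the three rates depends on `false_neg` or `true_neg`, and those are exactly the counts you cannot know without ground truth. If the labels come from IDS signatures, anything the signatures missed is silently counted as benign.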

Especially the last two points make the paper almost irrelevant. The fact that this wasn’t validated in a larger, real-world environment is another fallacy I keep seeing in research papers. Who says this environment was representative of every environment? Overall, I think this research is dangerous and is actually portraying wrong information. We cannot make a statement that deep learning is better than domain knowledge. The numbers for detection rates are dangerous and biased, but the bias isn’t discussed in the paper.

:q!

July 24, 2019

Before even diving into the topic of Causality Research, I need to clarify my use of the term #AI. I am getting sloppy in my definitions and am using AI like everyone else is using it, as a synonym for analytics. In the following, I’ll even use it as a synonym for supervised machine learning. Excuse my sloppiness …

Causality Research is a topic that has emerged from the shortcomings of supervised machine learning (SML) approaches. You train an algorithm with training data and it learns certain properties of that data to make decisions. For some problems that works really well and we don’t even care about what exactly the algorithm has learned. But in certain cases, we really would like to know what the system just learned. Your self-driving car, for example. Wouldn’t it be nice if we actually knew how the car makes decisions? Not just for our own peace of mind, but also to enable verifiability and testing.

Here are some thoughts about what is happening in the area of causality for AI:

- This topic is drawing attention because people have their blinders on when defining what AI is. AI is more than supervised machine learning, and a number of the algorithms in the field, like belief networks, are beautifully explainable.

- We need to get away from using specific algorithms as the focal point of our approaches. We need to look at the problem itself and determine what the right solution to the problem is. Some of the very old methods like belief networks (I sound like a broken record) are fabulous and have deep explainability. In the grand scheme of things, only a few problems require supervised machine learning.

- We are finding ourselves in a world where some people believe that data can explain everything. It cannot. History is not a predictor of the future. Even in experimental physics, we are getting to our limits and have to start understanding the fundamentals to get to explainability. We need to build systems that help experts encode their knowledge and augment human cognition by automating tasks that machines are good at.

The recent Cylance faux pas is a great example of why supervised machine learning and AI can be really, really dangerous. And it brings up a different topic that we need to start exploring more, which is how we measure the efficacy or precision of AI algorithms. How do we assess the things a given AI or machine learning approach misses, and what are the things it classifies wrong? How does one compute these metrics for AI algorithms? How do we determine whether one algorithm is better than another? For example, the algorithm that drives your car. How do you know how good it is? Does a software update make it better? How much? That’s a huge problem in AI and ‘causality research’ might be able to help develop methods to quantify efficacy.

August 7, 2018

Join me for my talk about AI and ML in cyber security at BlackHat on Thursday the 9th of August in Las Vegas. I’ll be exploring the topics of artificial intelligence (AI) and machine learning (ML) to show some of the ‘dangerous’ mistakes that the industry (vendors and practitioners alike) are making in applying these concepts in security.

We don’t have artificial intelligence (yet). Machine learning is not the answer to your security problems. And downloading the ‘random’ analytic library to identify security anomalies is going to do you more harm than it helps.

We will explore these accusations and walk away with the following learnings from the talk:

I am exploring these items throughout three sections in my talk: 1) A very quick set of definitions for machine learning, artificial intelligence, and data mining with a few examples of where ML has worked really well in cyber security. 2) A closer and more technical view on why algorithms are dangerous, and why downloading a library from the Internet is not a solution for finding security anomalies in your data. 3) An example scenario where we talk through supervised and unsupervised machine learning for network traffic analysis to show the difficulties with those approaches, and finally explore a concept called belief networks, which bears a lot of promise to enhance our detection capabilities in security by leveraging expert knowledge more closely.

I keep mentioning that algorithms are dangerous. Dangerous in the sense that they might give you a false sense of security or in the worst case even decrease your security quite significantly. Here are some questions you can use to self-assess whether you are ready and ‘qualified’ to use data science or ‘advanced’ algorithms like machine learning or clustering to find anomalies in your data:

- Do you know what the difference is between supervised and unsupervised machine learning?

- Can you describe what a distance function is?

- In data science we often look at two types of data: categorical and numerical. What are port numbers? What are user names? And what are IP sequence numbers?

- In your data set you see traffic from port 0. Can you explain that?

- You see traffic from port 80. What’s a likely explanation of that? Bonus points if you can come up with two answers.

- How do you go about selecting a clustering algorithm?

- What’s the explainability problem in deep learning?

- How do you acquire labeled network data sets (netflows or pcaps)?

- Name three data cleanliness problems that you need to account for before running any algorithms?

- When running k-means, do you have to normalize your numerical inputs?

- Does k-means support categorical features?

- What is the difference between a feature, data field, and a log record?
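A few of the questions above (distance functions, normalization, categorical versus numerical data) can be illustrated with a small sketch. The flows and their values are hypothetical, and min-max scaling is just one of several possible normalizations; take this as a sketch under those assumptions, not as a recipe.

```python
import math


def euclidean(a, b):
    """A distance function: maps two feature vectors to a non-negative number."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def min_max_normalize(rows):
    """Rescale every column to [0, 1] so that no single feature dominates the distance."""
    columns = list(zip(*rows))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]
    return [
        tuple((v - lo) / (hi - lo) if hi > lo else 0.0
              for v, lo, hi in zip(row, lows, highs))
        for row in rows
    ]


def categorical_distance(a, b):
    """0 if the values match, 1 otherwise; treats values as labels, not magnitudes."""
    return 0 if a == b else 1


# Hypothetical flows: (bytes transferred, duration in seconds)
flows = [(1_000, 1.0), (1_200, 60.0), (9_000, 1.5)]

# Unscaled, the byte counts dominate and the 59 second difference barely registers.
raw_near = euclidean(flows[0], flows[1])
raw_far = euclidean(flows[0], flows[2])

# After scaling, both features contribute on a comparable footing.
scaled = min_max_normalize(flows)
scaled_near = euclidean(scaled[0], scaled[1])
scaled_far = euclidean(scaled[0], scaled[2])

# Port numbers look numerical, but arithmetic on them carries no meaning:
# 8080 is not "further" from 80 than 443 is in any sense that matters.
numeric_gap_8080 = abs(80 - 8080)
numeric_gap_443 = abs(80 - 443)
label_gap_8080 = categorical_distance(80, 8080)
label_gap_443 = categorical_distance(80, 443)

print(raw_near, raw_far, scaled_near, scaled_far)
```

Since k-means computes cluster centers as means, it inherits both problems: unscaled numerical inputs skew the result, and a "mean port number" or "mean user name" does not mean anything.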

If you can’t answer the above questions, you might want to rethink your data science aspirations and come to my talk on Thursday to hopefully walk away with answers to the above questions.

Update 8/13/18: Added presentation slides

March 29, 2018

Another year, another Security Analytics Summit. This year Kaspersky gathered an amazing set of speakers in Cancun, Mexico. I presented on AI & ML in Cyber Security – Why Algorithms Are Dangerous. I was really pleased with how well the talk was received and it was super fun to see the storm that emerged on Twitter where people started discussing AI and ML.

Here are a couple of tweets that attendees of my talk tweeted out (thanks everyone!):

The following are some more impressions from the conference:

And here are the slides:

January 14, 2018

I have been talking about artificial intelligence (AI) and machine learning (ML) in cyber security quite a bit lately. You can find my latest two essays as guest posts on TowardsDataScience and DarkReading.

Following is a summary of the latest AI and ML posts with quick summaries:

I’d love to hear your comments – be that on twitter or as comments on the posts!

December 15, 2017

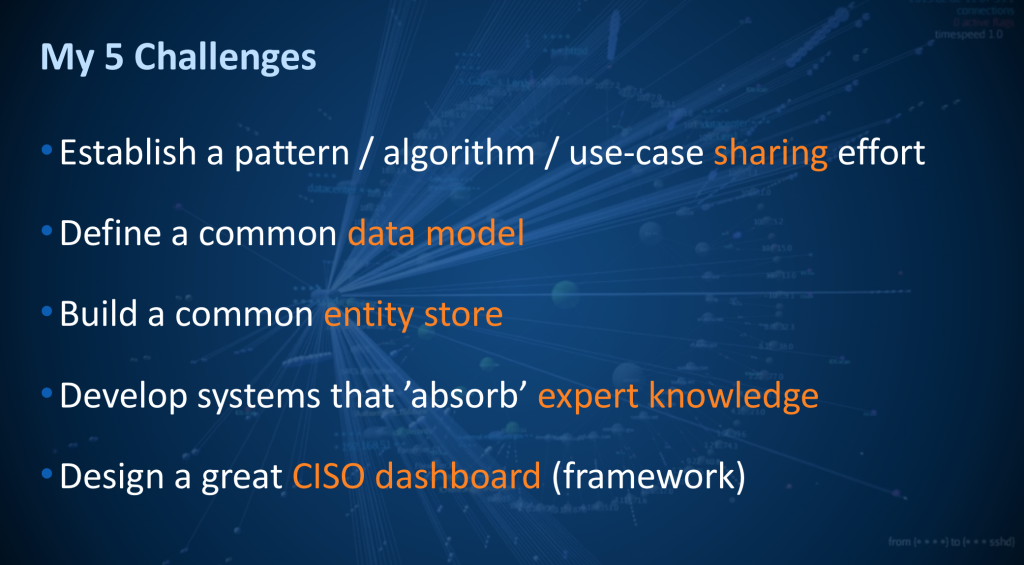

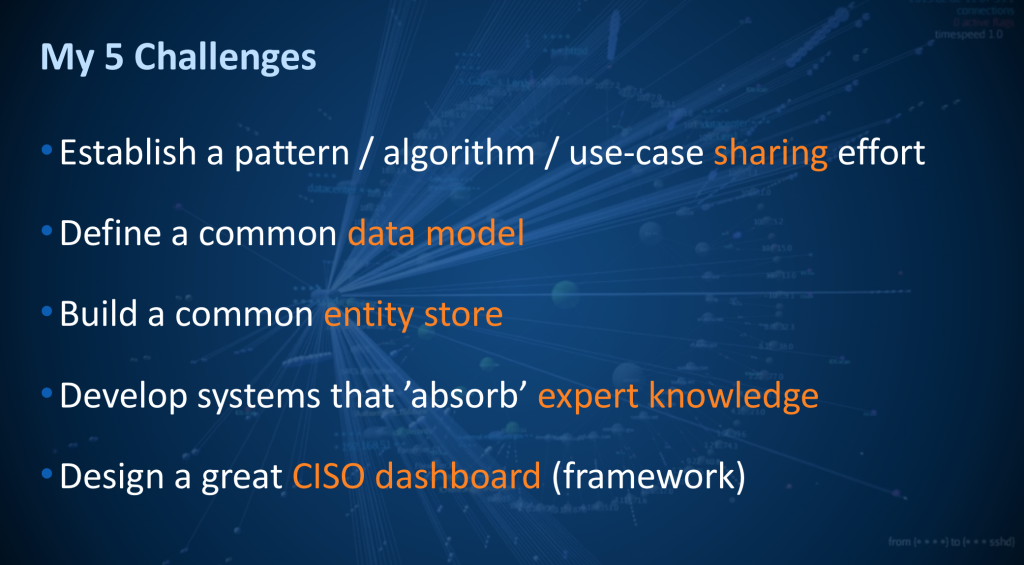

Previously, I started blogging about individual topics and slides from my keynote at ACSAC 2017. The first topic I elaborated on a little bit was An Incomplete Security Big Data History. In this post I want to focus on the last slide in the presentation, where I posed 5 Challenges for security with big data:

Let me explain and go into details on these challenges a bit more:

- Establish a pattern / algorithm / use-case sharing effort: Part of the STIX standard for exchanging threat intelligence is the capability to exchange patterns. However, we have been notoriously bad at actually doing that. We are exchanging simple indicators of compromise (IOCs), such as IP addresses or domain names. But talk to any company that is using those, and they’ll tell you that those indicators are mostly useless. We have to up-level our detections and engage in patterns; also called TTPs at times: tactics, techniques, and procedures. Those characterize attacker behavior, rather than calling out individual technical details of the attack. Back in the good old days of SIM, we built correlation rules (we actually still do). Problem is that we don’t share them. The default content delivered by the SIMs is horrible (I can say that. I built all of those for ArcSight back in the day). We don’t have a place where we can share our learnings. Every SIEM vendor is trying to do that on their own, but we need to start defining those patterns independent of products. Let’s get going! Who makes the first step?

- Define a common data model: For over a decade, we have been trying to standardize log formats. And we are still struggling. I initially wrote the Common Event Format (CEF) at ArcSight. Then I went to Mitre and tried to get the common event expression (CEE) work off the ground to define a vendor neutral standard. Unfortunately, getting agreement between Microsoft, RedHat, Cisco, and all the log management vendors wasn’t easy and we lost the air force funding for the project. In the meantime I went to work for Splunk and started the common information model (CIM). Then came Apache Spot, which has defined yet another standard (yes, I had my fingers in that one too). So the reality is, we have 4 pseudo standards, and none is really what I want. I just redid some major parts over here at Sophos (I hope I can release that at some point).

Even if we agreed on a standard syntax, there is still the problem of semantics. How do you know something is a login event? At ArcSight (and other SIEM vendors) that’s called the taxonomy or the categorization. In the 12 years since I developed the taxonomy at ArcSight, I learned a bit and I’d do it a bit differently today. Well, again, we need standards that products implement. Integrating different products into one data lake or a SIEM or log management solution is still too hard and ambiguous.

- Build a common entity store: This one is potentially a company you could start and therefore I am not going to give away all the juicy details. But look at cyber security. We need more context for the data we are collecting. Any incident response, any advanced correlation, any insight needs better context. What’s the user that was logged into a system? What’s the role of that system? Who owns it, etc. All those factors are important. Cyber security has an entity problem! How do you collect all that information? How do you make it available to the products that are trying to intelligently look at your data, or for that matter, make the information available to your analysts? First you have to collect the data. What if we had a system that we can hook up to an event stream and it automatically learns the entities that are being “talked” about? Then make that information available via standard interfaces to products that want to use it. There is some money to be made here! Oh, and guess what! By doing this, we can actually build it with privacy in mind. Anonymization built in!

- Develop systems that ‘absorb’ expert knowledge non-intrusively: I hammer this point home all throughout my presentation. We need to build systems that absorb expert knowledge. How can we do that without being too intrusive? How do we build systems with expert knowledge? This can be through feedback loops in products, through Bayesian belief networks, through simple statistics or rules, … but let’s shift our attention to knowledge and how we make experts and highly paid security people more efficient.

- Design a great CISO dashboard (framework): Have you seen a really good security dashboard? I’d love to see it (post in the comments?). It doesn’t necessarily have to be for a CISO. Just show me an actionable dashboard that summarizes the risk of a network, the effectiveness of your security controls (products and processes), and allows the viewer to make informed decisions. I know, I am being super vague here. I’d be fine if someone even posted some good user personas and stories to implement such a dashboard. (If you wait long enough, I’ll do it). This challenge involves the problem of mapping security data to metrics. Something we have been discussing for eons. It’s hard. What’s a 10 versus a 5 when it comes to your security posture? Any (shared) progress on this front would help.
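To give the entity store challenge above a bit of shape, here is a toy sketch of a store that learns entities from an event stream and hands back context on request. Everything in it is an assumption for illustration: the event fields (`ts`, `host`, `user`), the `EntityStore` class, and the in-memory design. A real system would need far more (identity resolution, persistence, privacy controls).

```python
from collections import defaultdict


class EntityStore:
    """Toy in-memory store that learns host entities from an event stream."""

    def __init__(self):
        self.entities = defaultdict(
            lambda: {"users": set(), "first_seen": None, "last_seen": None}
        )

    def observe(self, event):
        """Update what is known about the host mentioned in one event."""
        record = self.entities[event["host"]]
        ts = event["ts"]
        if record["first_seen"] is None or ts < record["first_seen"]:
            record["first_seen"] = ts
        if record["last_seen"] is None or ts > record["last_seen"]:
            record["last_seen"] = ts
        if event.get("user"):
            record["users"].add(event["user"])

    def context(self, host):
        """Return the accumulated context for a host, or None if it was never seen."""
        return self.entities.get(host)


# Hypothetical event stream; timestamps are plain integers to keep the sketch short.
store = EntityStore()
for event in [
    {"ts": 100, "host": "db1", "user": "alice"},
    {"ts": 160, "host": "db1", "user": "bob"},
    {"ts": 130, "host": "web1"},
]:
    store.observe(event)

print(store.context("db1"))
```

An analyst or a correlation engine could then ask `store.context("db1")` to learn who has been active on a system and since when, without going back to the raw logs.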

What are your thoughts? What challenges would you put out? Am I missing the mark? Or would you share my challenges?

October 22, 2017

After my latest blog post on “Machine Learning and AI – What’s the Scoop for Security Monitoring?”, there was a quick discussion on Twitter and Shomiron made a good point that in my post I solely focused on supervised machine learning.

In simple terms, as mentioned in the previous blog post, supervised machine learning is about learning with a training data set. In contrast, unsupervised machine learning is about finding or describing hidden structures in data. If you have heard of clustering algorithms, they are one of the main groups of algorithms in unsupervised machine learning (the other being association rule learning).

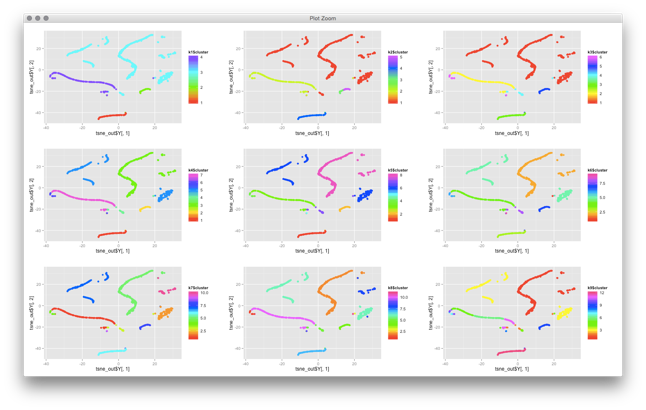

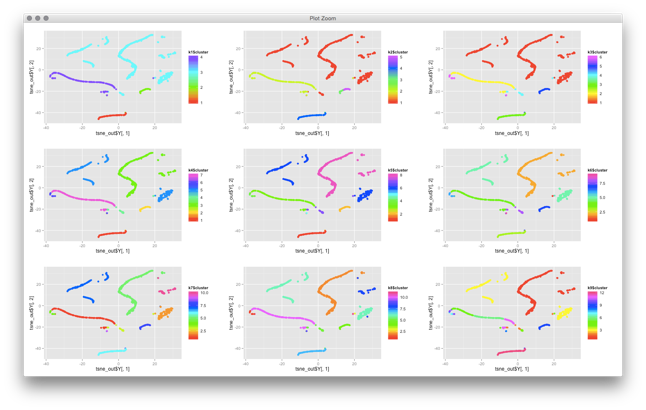

There are some quite technical problems with applying clustering to cyber security data. I won’t go into details for now. The problems are related to defining distance functions and the fact that most, if not all, clustering algorithms have been built around numerical and not categorical data. Turns out, in cyber security, we mostly deal with categorical data (urls, usernames, IPs, ports, etc.). But outside of these mathematical problems, there are other problems you face with clustering algorithms. Have a look at this visualization of clusters that I created from some network traffic:

Some of the network traffic clusters incredibly well. But now what? What does this all show us? You can basically do two things with this:

- You can try to identify what each of these clusters represents. But the explainability of clusters is not built into clustering algorithms! You don’t know why something shows up on the top right, do you? You have to somehow figure out what this traffic is. You could run some automatic feature extraction or figure out what the common features are, but that’s generally not very easy. It’s not like email traffic will nicely cluster on the top right and Web traffic on the bottom right.

- You may use this snapshot as a baseline. In fact, in the graph you see individual machines. They cluster based on their similarity of network traffic seen (with given distance functions!). If you re-run the same algorithm at a later point in time, you could try to see which machines still cluster together and which ones do not. Sort of using multiple cluster snapshots as anomaly detectors. But note also that these visualizations are generally not ‘stable’. What was on top right might end up on the bottom left when you run the algorithm again. Not making your analysis any easier.
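For the baseline idea in the second point, one way to sidestep the instability of labels and positions is to compare which machines end up together, rather than where they end up. The following is a minimal sketch under that assumption; the machine names and cluster assignments are made up, and the cluster ids deliberately differ between the two snapshots.

```python
from itertools import combinations


def co_clustered_pairs(assignment):
    """Set of machine pairs that share a cluster, independent of cluster ids or positions."""
    pairs = set()
    for a, b in combinations(sorted(assignment), 2):
        if assignment[a] == assignment[b]:
            pairs.add((a, b))
    return pairs


def snapshot_drift(before, after):
    """Pairs that used to cluster together but no longer do, and pairs that newly do."""
    old, new = co_clustered_pairs(before), co_clustered_pairs(after)
    return old - new, new - old


# Hypothetical snapshots: machine name -> cluster id assigned by that run.
monday = {"web1": 0, "web2": 0, "db1": 1, "db2": 1, "laptop7": 2}
friday = {"web1": 3, "web2": 3, "db1": 5, "db2": 4, "laptop7": 4}

split, joined = snapshot_drift(monday, friday)
print("no longer together:", split)
print("newly together:", joined)
```

Here the web servers still cluster together even though their cluster id changed, while `db2` has drifted away from `db1` and now behaves like a laptop. Whether that is an anomaly worth looking at is still a question for an analyst.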

If you are trying to implement case number one above, you can make it a bit less generic. You can try to inject some a priori knowledge about what you are looking for. For example, BotMiner / BotHunter uses an approach to separate botnet traffic from regular activity.

These are some of my thoughts on unsupervised machine learning in security. What are your use-cases for unsupervised machine learning in security?

PS: If you are doing work on clustering, have a look at t-SNE. Strictly speaking it is a dimensionality reduction technique rather than a clustering algorithm: it projects your multi-dimensional data into 2-dimensional space, where cluster structure often becomes visible. I have gotten incredible results with it. I’d love to hear from you if you have used the algorithm.

October 13, 2017

The other day I presented a Webinar on Big Data and SIEM for IANS research. One of the topics I briefly touched upon was machine learning and artificial intelligence, which resulted in a couple of questions after the Webinar was over. I wanted to pass along my answers here:

Q: Hi, one of the biggest challenges we have is that we have all the data and logs as part of the SIEM, but how do we review it effectively and in a timely manner, distinguishing ‘information’ from ‘noise’? Is Artificial Intelligence (AI) the answer?

A: AI is an overloaded term. When people talk about AI, they really mean machine learning. Let’s therefore have a look at machine learning (ML). For ML you need sample data; labeled data, which means that you need a data set where you already classified things into “information” and “noise”. From that, machine learning will learn the characteristics of ‘noisy’ stuff. The problem is getting a good, labeled data set, which is almost impossible. Given that, what else could help? Well, we need a way to characterize or capture the knowledge of experts. That is quite hard and many companies have tried. There is a company, “Respond Software”, which developed a method to run domain experts through a set of scenarios that they have to ‘rate’. Based on that input, they then build a statistical model which distinguishes ‘information’ from ‘noise’. Coming back to the original question, there are methods and algorithms out there, but the things to look for are systems that capture expert knowledge in a ‘scalable’ way; in a way that generalizes knowledge and doesn’t require constant re-learning.

Q: Can SIEMs create and maintain baselines using historical logs to help detect statistical anomalies against the baseline?

A: The hardest part about anomalies is that you have to first define what ‘normal’ is. A SIEM can help build up a statistical baseline. In ArcSight, for example, that’s called a moving average data monitor. However, a statistical outlier is not always a security problem. Just because I suddenly download a large file doesn’t mean I am compromised. The question then becomes, how do you separate my ‘download’ from a malicious download? You could show all statistical outliers to an analyst, but that’s a lot of false positives they’d have to deal with. If you can find a way to combine additional signals with those statistical indicators, that could be a good approach. Or combine multiple statistical signals. Be prepared for a decent amount of caring and feeding of such a system though! These indicators change over time.
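As a rough illustration of the baseline idea, here is a minimal sketch of a trailing-window statistical baseline and of combining it with a second signal. This is not how ArcSight's moving average data monitor works internally; the window size, the threshold, the daily volumes, and the "new destination" signal are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def outliers(values, window=7, threshold=3.0):
    """Indexes whose value deviates from the trailing-window mean by more than
    `threshold` standard deviations of that window."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily download volume in MB for one user.
daily_mb = [100, 110, 95, 105, 98, 102, 107, 990,
            101, 99, 104, 96, 103, 108, 100, 950]

download_outliers = outliers(daily_mb)

# Hypothetical second signal: days on which the user talked to a never-seen destination.
new_destination_days = {15}

# Only escalate when both signals agree.
escalate = [i for i in download_outliers if i in new_destination_days]

print("statistical outliers:", download_outliers)
print("worth an analyst's time:", escalate)
```

Both large downloads are statistical outliers, but only one coincides with the second signal. Note also that the first spike sits in the trailing window for the following week and inflates the baseline during that time, which is part of the caring and feeding mentioned above.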

Q: Have you seen any successful applications of Deep Learning in UEBA/Hunting?

A: I have not. Deep learning is just a modern machine learning algorithm that suffers from most of the problems that machine learning suffers from as well. To start with, you need large amounts of training data. Deep learning, just like any other machine learning algorithm, also suffers from explainability. Meaning that the algorithm might classify something as bad, but you will never know why it did that. If you can’t explain a detection, how do you verify it? Or how do you make sure it’s a true positive?

Hunting requires people. Focus on enabling hunters. Focus on tools that automate as much as possible in the hunting process. Giving hunters as much context as possible, fast data access, fast analytics, etc. You are trying to make the hunters’ jobs easier. This is easier said than done. Such tools don’t really exist out of the box. To get a start though, don’t boil the ocean. You don’t even need a fully staffed hunting team. Have each analyst spend an afternoon a week on hunting. Let them explore your environment. Let them dig into the logs and events. Let them follow up on hunches they have. You will find a ton of misconfigurations in the beginning and the analysts will come up with many more questions than answers, but you will find that through all the exploratory work, you get smarter about your infrastructure. You get better at documenting processes and findings, the analysts will probably automate a bunch of things, and not to forget: this is fun. Your analysts will come to work re-energized and excited about what they do.

Q: What are some of the best tools used for tying the endpoint products into SIEMs?

A: On Windows I can recommend using sysmon as a data source. On Linux it’s a bit harder, but there are tools that can hook into the audit capability or in newer kernels, eBPF is a great facility to tap into.

If you have an existing endpoint product, you have to work with the vendor to make sure they have some kind of a central console that manages all the endpoints. You want to integrate with that central console to forward the event data from there to your SIEM. You do not want to get into the game of gathering endpoint data directly. The amount of work required can be quite significant. How, for example, do you make sure that you are getting data from all endpoints? What if an endpoint goes offline? How do you track that?
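To show the kind of bookkeeping those last questions imply, here is a small sketch that compares an expected endpoint inventory against the time each endpoint last reported. The host names, timestamps, and the 24 hour silence threshold are assumptions for illustration; a central console from your endpoint vendor would normally do this for you.

```python
from datetime import datetime, timedelta


def silent_endpoints(expected, last_seen, now, max_silence=timedelta(hours=24)):
    """Return endpoints that never reported and endpoints that have been quiet too long."""
    missing = sorted(e for e in expected if e not in last_seen)
    stale = sorted(
        e for e in expected
        if e in last_seen and now - last_seen[e] > max_silence
    )
    return missing, stale


# Hypothetical inventory and last-event timestamps.
now = datetime(2017, 10, 13, 12, 0)
expected = {"laptop1", "laptop2", "server1"}
last_seen = {
    "laptop1": datetime(2017, 10, 13, 11, 30),  # reported half an hour ago
    "server1": datetime(2017, 10, 10, 9, 0),    # quiet for three days
}

missing, stale = silent_endpoints(expected, last_seen, now)
print("never reported:", missing)
print("gone quiet:", stale)
```

Even this trivial check depends on having a trustworthy list of expected endpoints, which is a hard problem in its own right.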

When you are integrating the data, it also matters how you correlate the data to your network data and what correlations you set up around your endpoint data. Work with your endpoint teams to brainstorm around use-cases and leverage a ‘hunting’ approach to explore the data to learn the baseline and then set up triggers from there.

Update: Check out my blog post on Unsupervised machine learning as a follow up to this post.