Hunting is about learning about, and understand your environment. It is used to build a ‘model’ of your network and applications that you then leverage to configure and tune your detection mechanisms.

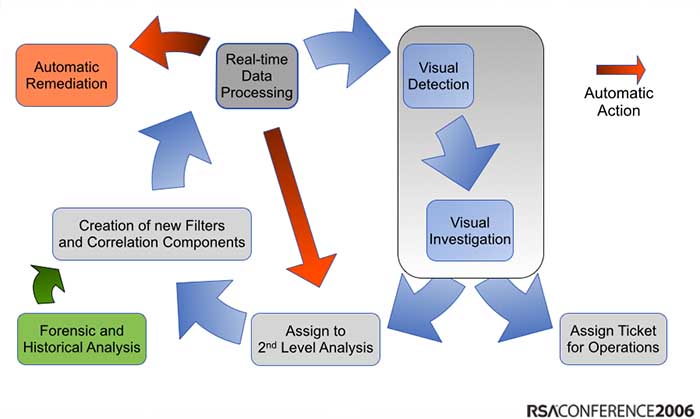

I have been preaching about the need to explore our security data for the past 9 or 10 years. I just went back in my slides and I even posted a process diagram about how to integrate exploratory analysis into the threat detection life cycle (sorry for the horrible graph, but back then I had to use PowerPoint 🙂 ):

Back then, people liked the visualizations they saw in my presentations, but nobody took the process seriously. They felt that the visualizations were pretty, but not that useful. Fast forward 7 or 8 years: Everyone is talking about hunting and how they need better tools an methods to explore their data. Suddenly those visualizations from back then are not just pretty, but people start seeing how they are actually really useful.

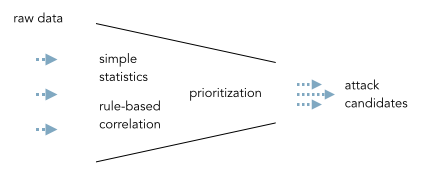

The core problem with threat detection is a broken process. Back in the glory days of SIEM, experts (or shall we say engineers) sat in a lab cooking up correlation rules and event prioritization formulas to help implement an event funnel:

This is so incredibly broken. [What do I know about this? I ran the team that did this for a big SIEM.] Not every environment is the same. There is no one formula that will help prioritize events. The correlation rules only scratch the surface, etc. etc. The fallout from this is that dozens of companies are frustrated with their SIEMs. They are looking for alternatives; throw them out and build their own. [Please don’t be one of them!]

Then threat intelligence came about. Instead of relying on signatures that identify common threats, let’s look for adversaries as well. Has that worked? Nope. There is a bunch of research indicating that the ‘external’ threat intelligence we have is not good.

What should we do? Well, we have to get away from thinking that a product will solve our problems. We have to roll-up our own sleeves and start creating our own ‘internal’ threat intelligence – through hunting.

The Hunting Process

Another word for hunting is exploring or learning. We need to understand our infrastructure, our applications to then find when there are things happening that are out of the norm.

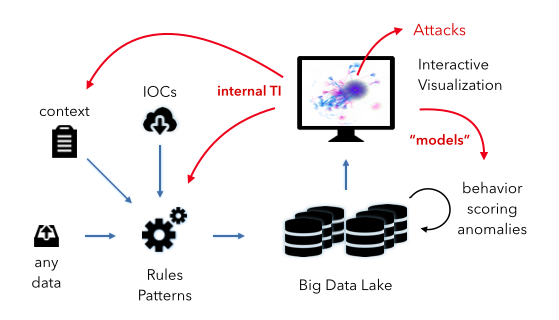

Let’s look at the diagram above. What you see is a very simple data pipeline that we find everywhere. We ingest any kind of data. We then apply some kind of intelligence to the data to flag relevant events. In the SIEM case, the intelligence are mostly the correlation rules and the prioritization formula. [We have discussed this: pure voodoo.] Let’s have a look at the other pieces in the diagram:

- Context: This is any additional information we can gather about our objects (machines, users, and applications). This information is crucial for good intelligence. The more surprising it is that the SIEMs haven’t put much attention to features around this.

- IOCs: This is any kind of external threat intelligence. (see discussion above) We can use the IOCs to flag potentially bad activity.

- Interactive Visualization: This is really the heart of the hunting process. This is the interface that a hunter uses to explore and learn about the data. Flexibility, speed, and an amazing user experience are key.

- Internal TI: This is the internal threat intelligence that I mentioned above. This is basically anything we learn about our environment from the hunting process. The information is fed back into the overall system in the form of context and rules or patterns.

- Models: The other kind of intelligence or knowledge we gather from our hunting activities we can use to optimize and define new models for behavioral analysis, scoring, and finding anomalies. I know, this is kind of vague, but we could spend an entire blog post on just this. Ask if you wanna know more.

To summarize, the hunting process really helps us learn about our environment. It teaches us what is “normal” and how to find “anomalies”. More practically, it informs the rules we write, the patterns we try to find, the behavioral models we build. Without going through this process, we won’t be able to configure / define a system that has a fighting chance of finding advanced adversaries or attackers in our network. Non. Zero. Zilch.

Some more context and elaboration on some of the hunting concepts you can find in my recent darkreading blog post about Threat Intelligence.