July 11, 2013

This is a slide I built for my Visual Analytics Workshop at BlackHat this year. I tried to summarize all the SIEM and log management vendors out there. I am pretty sure I missed some players. What did I miss? I’ll try to add them before the training.

Enjoy!

Here is the list of vendors that are on the slide (in no particular order):

Log Management

- Tibco

- KeyW

- Tripwire

- Splunk

- Balabit

- Tier-3 Systems

SIEM

- HP

- Symantec

- Tenable

- Alienvault

- Solarwinds

- Attachmate

- eIQ

- EventTracker

- BlackStratus

- TrustWave

- LogRhythm

- ClickSecurity

- IBM

- McAfee

- NetIQ

- RSA

- Event Sentry

Logging as a Service

- SumoLogic

- Loggly

- PaperTrail

- Torch

- AlertLogic

- SplunkStorm

- logentries

- eGestalt

Update: With input from a couple of folks, I updated the slide a couple of times.

December 8, 2011

At the beginning of this week, I spent some time with a number of interesting folks talking about cyber security visualization. It was a diverse set of people from the DoD, the X Prize foundation, game designers, and even an astronaut. We all discussed what it would mean if we launched a grand challenge to improve cyber situational awareness. Something like the Lunar XPrize that is a challenge where teams have to build a robot and successfully send it to the moon.

There were a number of interesting proposals that came to the table. On a lot of them I had to bring things back down to reality every now and then. These people are not domain experts in cyber security, so you might imagine what kind of ideas they suggested. But it was fun to be challenged and to hear all these crazy ideas. Definitely expanded my horizon and stretched my imagination.

What I found interesting is that pretty much everybody gravitated towards a game-like challenge. All the way to having a game simulator for cyber security situational awareness.

Anyways, we’ll see whether the DoD is actually going to carry through with this. I sure hope so, it would help the secviz field enormously and spur interesting development, as well as extend and revitalize the secviz community!

Here is the presentation about situational awareness that I gave on the first day. I talked very briefly about what situational awareness is, where we are today, what the challenges are, and where we should be moving to.

November 8, 2010

It’s time for a quick re-hash of recent publications and happenings in my little logging world.

- First and foremost, Loggly is growing and we have around 70 users on our private beta. If you are interested in testing it out, signup online and email or tweet me.

- I recorded two pod casts lately. The first one was around Logging As A Service. Check out my blog post over on Loggly’s blog to get the details.

- The second pod cast I recorded last week on the topic of business justification for logging. This is part of Anton Chuvakin’s LogCast series.

- I have been writing a little lately. I got three academic papers accepted at conferences. The one I am most excited about is the Cloud Application Logging for Forensics one. It is really applicable to any application logging effort. If you are developing an application, you should have a look at this. It talks about logging guidelines, a logging architecture and gives a bunch of very specific tips on how to go about logging. The other two papers are on insider threat and visualization: “Visualizing the Malicious Insider Threat”

- I will have some new logging and visualization related resources available soon. I am going to be speaking at a number of conferences in the next month: Congreso Seguridad en Computo 2010 in Mexico City, DeepSec 2010 in Vienna, and the SANS WhatWorks in Incident Detection and Log Management Summit 2010 in D.C.

See you next time.

June 7, 2010

The following blog post was originally posted in December 2008. I updated it slightly to fit current times:

This following blog post has turned into more than just a post. It’s more of a paper. In any case, in the post I am trying to capture a number of concepts that are defining the log management and analysis market (as well as the SIEM or SEM markets).

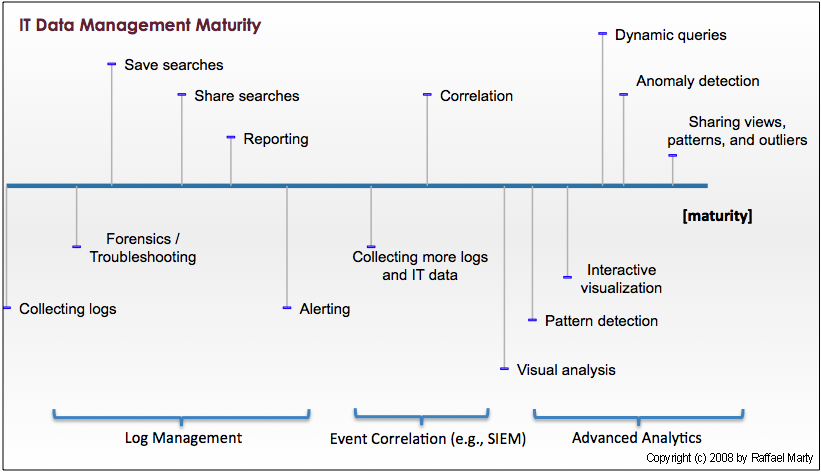

Any company or IT department/operation can be placed along the maturity scale (see Figure 1). The further on the right, the more mature the operations with regards to IT data management. A company generally moves along the scale. A movement to the right does not just involve the purchase of new solutions or tools, but also needs to come with a new set of processes. Products are often necessary but are not a must.

The further one moves to the right, the fewer companies or IT operations can be found operating at that scale. Also note that the products that companies use are called log management tools for the ones located on the left side of the scale. In the middle, it is the security information and event management (SIEM) products that are being used, and on the right side, companies have to look at either in-house tools, scripts, or in some cases commercial tools in markets other than the security market. Some SIEM tools are offering basic advanced analytics capabilities, but they are very rudimentary. The reason why there are no security specific tools and products on the right side becomes clear when we understand a bit better what the scale encodes.

Figure 1: IT Data Management Maturity Scale.

The Maturity Scale

Let us have a quick look at each of the stages on the scale. (Skip over this if you are interested in the conclusions and not the details of the scale.)

- Do nothing: I didn’t even explicitly place this stage on the scale. However, there are a great many companies out there that do exactly this. They don’t collect data at all.

- Collecting logs: At this stage of the scale, companies are collecting some data from a few data sources for retention purposes. Sometimes compliance is the driver for this. You will mostly find things like authentication logs or maybe message logs (such as email transaction logs or proxy logs). The number of different data sources is generally very small. In addition, you mostly find log files here. No more specific IT data, such as multi-line applications logs or configurations. A new trend that we are seeing here is the emergence of the cloud. A number of companies are looking to move IT services into the cloud and have them delivered by service providers. The same is happening in log management. It doesn’t make sense for small companies to operate and maintain their own logging solutions. A cloud-based offering is perfect for those situations.

- Forensics / Troubleshooting: While companies in the previous stage simply collect logs for retention purposes, companies in this stage actually make use of the data. In the security arena they are conducting forensic investigations after something suspicious was noticed or a breach was reported. In IT operations, the use-case is troubleshooting. Take email logs, for example. A user wants to know why he did not receive a specific email. Was it eaten by the SPAM filter or is something else wrong?

- Save searches: I don’t have a better name for this. In the simplest case, someone saves the search expression used with a

grep command. In other cases, where a log management solution is used, users are saving their searches. At this stage, analysts can re-use their searches at a later point in time to find the same type of problems again, without having to reconstruct the searches every single time.

- Share searches: If a search is good for one analyst, it might be good for another one as well. Analysts at some point start sharing their ways of identifying a certain threat or analyze a specific IT problem. This greatly improves productivity.

- Reporting: Analysts need reports. They need reports to communicate findings to management. Sometimes they need reports to communicate among each other or to communicate with other teams. Generally, the reporting capabilities of log management solutions are fairly limited. They are extended in the SEM products.

- Alerting: This capability lives in somewhat of a gray-zone. Some log management solutions provide basic alerting, but generally, you will find this capability in a SEM. Alerting is used to automate some of the manual trouble-shooting that is done among companies on the left side of the scale. Instead of waiting for a user to complain that there is something wrong with his machine and then looking through the log files, analysts are setting up alerts that will notify them as soon as there are known signs of failures showing up. Things like monitoring free disk space are use-cases that are automated at this point. This can safe a lot of manual labor and help drive IT towards a more automated and pro-active discipline.

- Collecting more logs and IT data: More data means more insight, more visibility, broader coverage, and more uses. For some use-cases we now need new data sources. In some cases it’s the more exotic logs, such as multi-line application logs, instant messenger logs, or physical access logs. In addition more IT data is needed: configuration files, host status information, such as open ports or running processes, ticketing information, etc. These new data sources enable a new and broader set of use-cases, such as change validation.

- Correlation: The manual analysis of all of these new data sources can get very expensive and too resource intense. This is where SEM solutions can help automate a lot of the analysis. Uses like correlating trouble tickets with file changes, or correlating IDS data with operating system logs (Note that I didn’t say IDS and firewall logs!) There is much much more to correlation, but that’s for another blog post.

Note the big gap between the last step and this one. It takes a lot for an organization to cross this chasm. Also note that the individual mile-stones on the right side are drawn fairly close to each other. In reality, think of this as a log scale. These mile-stones can be very very far apart. The distance here is not telling anymore.

- Visual analysis: It is not very efficient to read through thousands of log messages and figure out trends or patterns, or even understand what the log entries are communicating. Visual analysis takes the textual information and packages them in an image that conveys the contents of the logs. For more information on the topic of security visualization see Applied Security Visualization.

- Pattern detection: One could view this as advanced correlation. One wants to know about patterns. Is it normal that when the DNS server is doing a zone transfer that you will also find a number of IDS alerts along with some firewall log entries? If a user browses the Web, what is the pattern of log files that are normally seen? Patter detection is the first step towards understanding an IT environment. The next step is to then figure out when something is an outlier and not part of a normal pattern. Note that this is not as simple as it sounds. There are various levels of maturity needed before this can happen. Just because something is different does not mean that it’s a “bad” anomaly or an outlier. Pattern detection engines need a lot of care and training.

- Interactive visualization: Earlier we talked about simple, static visualization to better understand our IT data. The next step in the application of visualization is interactive visualization. This type of visualization follows the principle of: “overview first, zoom and filter, then details on demand.” This type of visualization along with dynamic queries (the next step) is incredibly important for advanced analysis of IT data.

- Dynamic queries: The next step beyond interactive, single-view visualizations are multiple views of the same data. All of the views are linked together. If you select a property in one graph, the selection propagates to the others. This is also called dynamic queries. This is the gist of fast and efficient analysis of your IT data.

- Anomaly detection: Various products are trying to implement anomaly detection algorithms in order to find outliers, or anomalous behavior in the IT environment. There are many approaches that people are trying to apply. So far, however, none of them had broad success. Anomaly detection as it is known today is best understood for closed use-cases. For example, NBADs are using anomaly detection algorithms to flag interesting findings in network flows. As of today, nobody has successfully applied anomaly detection across heterogeneous data sources.

- Sharing views, patterns, and outliers: The last step on my maturity scale is the sharing of advanced analytic findings. If I know that certain versions of the Bind DNS server tend to trigger a specific set of Snort IDS alerts, it is something that others should know as well. Why not share it? Unfortunately, there are no products that allow us to share this knowledge.

While reading the maturity scale, note the gaps between the different stages. They signify how quickly after the previous step a new step sets in. If you were to look at the scale from a time-perspective, you would start an IT data management project on the left side and slowly move towards the right. Again, the gaps are fairly indicative of the relative time such a project would consume.

Related Quantities

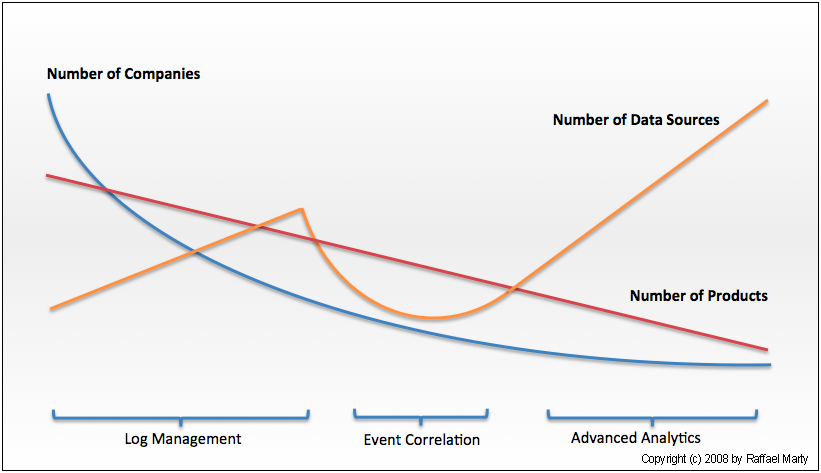

The scale could be overlaid with a lines showing some interesting, related properties. I decided to not do so in favor of legibility. Instead, have a look at Figure 2. It encodes a few properties: number of products on the market, number of customers / users, and number of data sources needed at that state of maturity.

Figure 2: The number of product, companies, and data sources tat are used / available along the maturity scale.

Why are so few products on the right side of the scale? The most obvious reason is one of market size. There are not many companies on the right side. Hence there are not many products. It is sort of a chicken and an egg problem. If there were more products, there might be more companies using them – maybe. However, there are more reasons. One of them being that in order to get to the right side, a company has to traverse the entire scale on the left. This means that the potential market for advanced analytics is the amount of companies that linger just before the advanced analytics market itself. That market is a very small one. The next question would be why there are not more companies close to the advanced analytics stage? There are multiple reasons. Some of them are:

- Not many environments manage to collect enough data to implement advanced analytics across heterogeneous data. Too many environments are stuck with just a few data sources. There are organizational, architectural, political, and technical reasons why this is so.

- A lack of qualified people (engineers, architects, etc) is another reason. Not many companies have the staff that understands how to deal with all the data collected. Not many people understand how to interpret the vast amount of different data sources.

The effects of these phenomenon play yet again into the availability of products for the advanced analytics side of the scale. Because there are not many environments that actually collect a diverse set of IT data, companies (or academia) cannot conduct research on the subject. And if they do, they mostly get it wrong or capture just a very narrow use-case.

What Else Does the Maturity Scale Tell Us?

Let us have a look at some of the other things that we can learn from/should know about the maturity scale:

- What does it mean for a company to be on the far right of the scale?

- In-depth understanding of the data

- Understanding of how to apply advanced analytics, such as visualization theory, anomaly detection, etc)

- Baseline of the behavior in the organization’s environment (needed for example for anomaly detection)

- Understanding of the context of the data gathered, such as what’s the network topology, what are the properties of the assets, etc.

- Have to employ knowledgeable people. These experts are scarce and expensive.

- Collecting all log data, which is hard!

- What are some other preconditions to live on the right side?

- A mature change management process

- Asset management

- IT infrastructure documentation

- Processes to deal with the findings/intelligence from advanced analytics

- A security policy that tells what is allowed and intended and what is not. (Have you ever put a sniffer on the network to see what traffic there is? Did you understand all of it? This is pretty much the same thing, you put a huge sniffer on your IT environment and try to explain everything. Wow!

- Understand the environment to the point where questions like: “What’s really normal?” are answered quickly. Don’t be fooled. This is nearly impossible. There are so many questions that need to be answered, such as: “Is a DNS server that generates ICMP messages every now and then an anomaly? Is it a security problem? What is the payload of the ICMP message? Maybe an information leak?”

- What’s the return on investment (ROI) for living on the right-side of the scale?

- It’s just not clear!

- Isn’t it cheaper to ignore than to discover?

- What do you intend to find and what will you find?

- So, what’s the ROI? It’s hard to measure, but you will be able to:

- Detect problems earlier

- Uncover attacks and policy violations quicker

- Prevent information leaks

- Reduce down-time of infrastructure and applications

- Reduce labor of service desk and system administration

- More stable applications

- etc. etc.

- What else?

March 11, 2009

Peter Kuper (@peterkuper), just gave the keynote at SOURCEBoston.

Peter Kuper (@peterkuper), just gave the keynote at SOURCEBoston.

The Bad, The Ugly, and the Good

It looks bad out there. Unemployment is up, companies are going out of business, etc. Well, it had to happen. The economy has to clean itself. It’s a reset of the system. Do really need another car?

Let us look at some historic data. Past recessions were preceded by drops in software spending, except for this time. Software spending was actually growing. The reason for this being that software has been more and more positioned and understood to increase productivity, which is a really interesting development.

Is it getting any better? According to my friend, who runs a blog about this crypto app, the financial markets teach us that corporate IT spending follows personal consumer expenditures. The problem is that consumers don’t have money to spend and they are over-leveraged. There is just too much dept. This means that corporate expenditures will be down for a while until personal spending will pick up again. Another interesting fact about the security market is that there are too many vendors in the market place. We will see more failures and more acquisitions over the next years.

The good news is that there is opportunity. Cash is king. If you can pay cash, you will get a deal. You can leverage this fact in your favor. If you are an investor or you are dealing with investors, the thing to be aware of, is that they dictate the terms. Keep that in mind. For inventors, this market is an opportunity. There is a big need in many areas to help companies improve on their expenditures and optimize processes! Help companies be more competitive. Things like how they can safe power can result in actual measurable benefits. Where should you focus your inventions? Focus on software. Hardware spending is down year over year, while software is on the raise. In addition, investment in software has been fairly consistent across IT budgets. Another market data point, according to research by Arcules, is that security budgets are flat this year. They haven’t increased, but they have not decreased either. However, they might go down next year. What this means is that companies will have to do more with less. Leverage their existing investments better. [This was one of my security predictions for 2009 also. In addition, I think this is a great driver to get companies from the left hand side of the maturity scale over to the right-hand side. Doing more with what you have.]

To use the market to your advantage, you need to think about what you are doing to position yourself or your firm to be the one rocketing ahead of the curve. Also use the development on the stock markets to your advantage. Compare competitors and play them against each other. If you are intending to buy a product, use that information to make your case about why you want a discount.

What does all of the market development mean for Entrepreneurs? First of all, VCs need to keep their portfolios alive. They are giving more money to their portfolio companies, but generally less than they would in better times. Software is getting money. Great ideas still get money. If you are intending to start a company, it’s the best time right now. You are not missing out on the big upside. You are not dealing with any bad legacy. You have a clean slate. Keep an eye on being efficient from the beginning on. For example, don’t hire too many people to start with, but outsource or hire contractors. Manage every penny. Be careful with spending. Also think about how you position yourself. Are you planning the big bang? Or are you building for being acquired?

Planning today will pay huge dividends when things eventually do recover!

During the questions in the end, some comments were made that the banks didn’t understand how to manage risk. How does that affect IT security and IT risk management? Does IT security even matter to banks? Adam Shostack gave a great answer: “Banks know very well how to manage risk: They took all of the upside and wrote off the downside” But seriously, What it really comes down to is managing incentives for reducing risk. The right incentive system needs to pu in place.

Peter Kuper (

Peter Kuper (