September 17, 2014

A new version of AfterGlow is ready. Version 1.6.5 has a couple of improvements:

1. If you have an input file which only has two columns, AfterGlow now automatically switches to a two-node mode. You don’t have to use the (-t) switch explicitly anymore in this case! (I know, it’s about time I added this)

2. Very minor change, but something that kept annoying me over time is the default edge length. It was set to 3 initially and now it’s reduced to 1.5, which makes for somewhat more compact graphs. You can still change this with the -e switch on the command line.

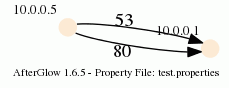

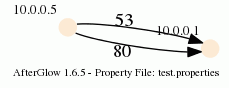

3. The major change, though, is the addition of edge labels. Here is a quick example:

label.edge=$fields[2]

This assumes that the third column of your data contains the label for the data. In the example below, the port numbers:

10.0.0.5,10.0.0.1,53

10.0.0.5,10.0.0.1,80

When you run afterglow, use the -t switch to have it render only two nodes, but given the configuration above, we are using the third column as the edge label. The output will look like this:

As you can see, the same edge is defined twice in the data, with two different labels (port 53 and 80). If you want the graph to show both edges, add the following setting to the configuration file:

label.duplicate=1

Which then results in the following graph:

Note that the duplicating of edges only works with GDF files (-k). The edge labels work in DOT and GDF files, not in GraphSON output.
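To make the effect of label.duplicate a bit more concrete, here is a minimal Python sketch of the two behaviours. This is not AfterGlow's code: the function name build_edges is made up for illustration, and keeping the first label when duplicate edges are collapsed is an assumption on my part.

```python
import csv
import io


def build_edges(csv_text, keep_duplicates=False):
    """Return (source, target, label) tuples from three-column CSV text."""
    edges = []
    seen = set()
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) < 3:
            continue  # ignore blank or incomplete lines
        source, target, label = row[0], row[1], row[2]
        if not keep_duplicates and (source, target) in seen:
            continue  # assumption: the first label wins when edges are collapsed
        seen.add((source, target))
        edges.append((source, target, label))
    return edges


sample = "10.0.0.5,10.0.0.1,53\n10.0.0.5,10.0.0.1,80\n"
print(build_edges(sample))                        # one edge between the two nodes
print(build_edges(sample, keep_duplicates=True))  # one edge per label
```

With keep_duplicates left off, the two input lines collapse into a single edge; with it switched on, each label gets its own edge, which is the idea behind label.duplicate=1.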

October 25, 2013

As I outlined in my previous blog post on How to clean up network traffic logs, I have been working with the VAST 2013 traffic logs. Today I am going to show how you can load the traffic logs into Impala (with a Parquet table) for very quick querying.

First off, Impala is a real-time search engine for Hadoop (i.e., Hive/HDFS). So, scalable, distributed, etc. In the following I am assuming that you have Impala installed already. If not, I recommend you use the Cloudera Manager to do so. It’s pretty straightforward.

First we have to load the data into Impala, which is a two-step process. We are using external tables, meaning that the data will live in files on HDFS. So we first get the data into HDFS and then load it into Impala:

$ sudo su - hdfs

$ hdfs dfs -put /tmp/nf-chunk*.csv /user/hdfs/data

We first become the hdfs user, then copy all of the netflow files from the MiniChallenge into HDFS at /user/hdfs/data. Next up we connect to impala and create the database schema:

$ impala-shell

create external table if not exists logs (

TimeSeconds double,

parsedDate timestamp,

dateTimeStr string,

ipLayerProtocol int,

ipLayerProtocolCode string,

firstSeenSrcIp string,

firstSeenDestIp string,

firstSeenSrcPort int,

firstSeenDestPor int,

moreFragment int,

contFragment int,

durationSecond int,

firstSeenSrcPayloadByte bigint,

firstSeenDestPayloadByte bigint,

firstSeenSrcTotalByte bigint,

firstSeenDestTotalByte bigint,

firstSeenSrcPacketCoun int,

firstSeenDestPacketCoun int,

recordForceOut int)

row format delimited fields terminated by ',' lines terminated by '\n'

location '/user/hdfs/data/';

Now we have a table called ‘logs’ that contains all of our data. We told Impala that the data is comma separated and where the data files are. That’s already it. On my installation I also leveraged the columnar data format that Impala supports to speed up queries. A lot of analytic queries don’t really suit the row-oriented layout of traditional databases; a columnar layout is much better suited. Therefore we are creating a Parquet-based table:

create table pq_logs like logs stored as parquetfile;

insert overwrite table pq_logs select * from logs;

The second command is going to take a bit as it loads all the data into the new Parquet table. You can now issue queries against the pq_logs table and you will get the benefits of a columnar data store:

select distinct firstseendestpor from pq_logs where morefragment=1;

Have a look at my previous blog entry for some more queries against this data.
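If you wonder why the columnar layout helps for a query like the one above, here is a toy Python illustration. It says nothing about how Parquet or Impala work internally; the three records and the shortened column names are made up. The point is only that a query touching two columns needs to read just those two columns when the data is stored column by column.

```python
# Row-oriented: one complete record per entry.
rows = [
    {"srcport": 9130, "destport": 80, "morefragment": 0},
    {"srcport": 80, "destport": 14545, "morefragment": 1},
    {"srcport": 51358, "destport": 25, "morefragment": 1},
]

# Column-oriented: one list per column, same data.
columns = {name: [row[name] for row in rows] for name in rows[0]}


def distinct_destports_rowwise(rows):
    # Walks whole records even though only two fields matter.
    return sorted({r["destport"] for r in rows if r["morefragment"] == 1})


def distinct_destports_columnar(columns):
    # Reads only the two columns the query mentions.
    pairs = zip(columns["destport"], columns["morefragment"])
    return sorted({port for port, frag in pairs if frag == 1})


print(distinct_destports_rowwise(rows))
print(distinct_destports_columnar(columns))
```

Both functions return the same answer; on disk, the second access pattern means far less data has to be read for wide tables like ours with 19 columns.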

October 22, 2013

I have spent some significant time with the VAST 2013 Challenge. I have been part of the program committee for a couple of years now and have seen many challenge submissions. Both good and bad. What I noticed with most submissions is that the authors a) didn’t really understand network data, and b) didn’t clean the data correctly. If you want to follow along with my analysis, the data is here: Week 1 – Network Flows (~500MB)

Also check the follow-on blog post on how to load data into a columnar data store in order to work with it.

Let me help with one quick comment. There is a lot of traffic in the data that seems to be involving port 0:

$ cat nf-chunk1-rev.csv | awk -F, '{if ($8==0) print $0}'

1364803648.013658,2013-04-01 08:07:28,20130401080728.013658,1,OTHER,172.10.0.6,

172.10.2.6,0,0,0,0,1,0,0,222,0,3,0,0

Just because it says port 0 in there doesn’t mean it’s port 0! Check out field 5, which says OTHER. That’s the transport protocol. It’s not TCP or UDP, so the port is meaningless. Most likely this is ICMP traffic!
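Here is a small Python sketch of that rule: only trust the port columns when the protocol actually has ports. The function name ports_for is made up, and I am assuming the protocol codes are spelled TCP and UDP in the data (TCP shows up in the records further down, UDP is my assumption).

```python
PORT_BASED_PROTOCOLS = {"TCP", "UDP"}  # assumption: codes as spelled in the CSV


def ports_for(line):
    """Return (src_port, dest_port), or (None, None) if the protocol has no ports."""
    fields = line.rstrip("\n").split(",")
    protocol_code = fields[4]                # field 5 in awk's 1-based counting
    if protocol_code not in PORT_BASED_PROTOCOLS:
        return (None, None)
    return (int(fields[7]), int(fields[8]))  # fields 8 and 9 in awk terms


other = ("1364803648.013658,2013-04-01 08:07:28,20130401080728.013658,1,OTHER,"
         "172.10.0.6,172.10.2.6,0,0,0,0,1,0,0,222,0,3,0,0")
tcp = ("1364803504.948029,2013-04-01 08:05:04,20130401080504.948029,6,TCP,"
       "172.30.1.11,10.0.0.12,9130,80,0,0,0,176,409,454,633,5,4,0")
print(ports_for(other))
print(ports_for(tcp))
```

For the OTHER record the ports come back as None instead of a misleading 0.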

On to another problem with the data. Some of the sources and destinations are turned around in the traffic. This happens with network flow collectors. Look at these two records:

1364803504.948029,2013-04-01 08:05:04,20130401080504.948029,6,TCP,172.30.1.11,

10.0.0.12,9130,80,0,0,0,176,409,454,633,5,4,0

1364807428.917824,2013-04-01 09:10:28,20130401091028.917824,6,TCP,172.10.0.4,

172.10.2.64,80,14545,0,0,0,7425,0,7865,0,8,0,0

The first one is totally legitimate. The source port is 9130, the destination 80. The second record, however, has the source and destination turned around. Port 14545 is not a valid destination port and the collector just turned the information around.

The challenge for you now is to find which records are inverted and then flip them back around. Here is what I did to find the ones that were turned around (Note, I am only using the first week of data for MiniChallenge1!):

select firstseendestport, count(*) c from logs group by firstseendestport order

by c desc limit 20;

| 80 | 41229910 |

| 25 | 272563 |

| 0 | 119491 |

| 123 | 95669 |

| 1900 | 68970 |

| 3389 | 58153 |

| 138 | 6753 |

| 389 | 3672 |

| 137 | 2352 |

| 53 | 955 |

| 21 | 767 |

| 5355 | 311 |

| 49154 | 211 |

| 464 | 100 |

| 5722 | 98 |

...

What I am looking for here are the top destination ports, my theory being that most valid ports will show up quite a lot. This gave me a first candidate list of ports. I am looking for two things here: first, the frequency of the ports, and second, whether I recognize the ports as being valid. Based on the frequency alone I would include the ports down to port 3389 on my candidate list. But because all the following ones are well-known ports, I will include them down to port 21. So the first list is:

80,25,0,123,1900,3389,138,389,137,53,21

I’ll drop 0 from this due to the comment earlier!
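If you don't have Impala at hand, the same frequency count can be sketched in a few lines of Python directly on the CSV lines. The function name top_dest_ports is made up, and the sketch assumes 19 comma-separated fields per record with the destination port in column 9.

```python
from collections import Counter


def top_dest_ports(lines, limit=20):
    """Count destination ports (column 9) and return the most frequent ones."""
    counts = Counter()
    for line in lines:
        fields = line.rstrip("\n").split(",")
        if len(fields) != 19 or not fields[8].isdigit():
            continue  # skip headers and malformed lines
        counts[int(fields[8])] += 1
    return counts.most_common(limit)


records = [
    "1364803504.948029,2013-04-01 08:05:04,20130401080504.948029,6,TCP,"
    "172.30.1.11,10.0.0.12,9130,80,0,0,0,176,409,454,633,5,4,0",
    "1364807428.917824,2013-04-01 09:10:28,20130401091028.917824,6,TCP,"
    "172.10.0.4,172.10.2.64,80,14545,0,0,0,7425,0,7865,0,8,0,0",
]
print(top_dest_ports(records))
```

To run it over the real data, pass an open file handle instead of the records list. For files of this size it will be a lot slower than the Parquet table, though.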

Next up, let’s see what the top source ports are that are showing up.

| firstseensrcport | c |

+------------------+---------+

| 80 | 1175195 |

| 62559 | 579953 |

| 62560 | 453727 |

| 51358 | 366650 |

| 51357 | 342682 |

| 45032 | 288301 |

| 62561 | 256368 |

| 45031 | 227789 |

| 51359 | 180029 |

| 45033 | 157071 |

| 0 | 119491 |

| 45034 | 117760 |

| 123 | 95622 |

| 1984 | 81528 |

| 25 | 19646 |

| 138 | 6711 |

| 137 | 2288 |

| 2024 | 929 |

| 2100 | 927 |

| 1753 | 926 |

See that? Port 80 is the top source port showing up. Definitely a sign of a source/destination confusion. There are a bunch of others from our previous candidate list showing up here as well. All records where we have to turn source and destination around. But likely we are still missing some ports here.

Well, let’s see what other source ports remain:

select firstseensrcport, count(*) c from pq_logs2 group by firstseensrcport

having firstseensrcport<1024 and firstseensrcport not in (0,123,138,137,80,25,53,21)

order by c desc limit 10

+------------------+--------+

| firstseensrcport | c |

+------------------+--------+

| 62559 | 579953 |

| 62560 | 453727 |

| 51358 | 366650 |

| 51357 | 342682 |

| 45032 | 288301 |

| 62561 | 256368 |

| 45031 | 227789 |

| 51359 | 180029 |

| 45033 | 157071 |

| 45034 | 117760 |

Looks pretty normal. Well, sort of, but let’s not digress. Let’s see whether any ports below 1024 show up. Indeed, port 20 shows up, a totally legitimate destination port. Pulling out the destination ports for those records shows what look like actual source ports:

+------------------+------------------+---+

| firstseensrcport | firstseendestport| c |

+------------------+------------------+---+

| 20 | 3100 | 1 |

| 20 | 8408 | 1 |

| 20 | 3098 | 1 |

| 20 | 10129 | 1 |

| 20 | 20677 | 1 |

| 20 | 27362 | 1 |

| 20 | 3548 | 1 |

| 20 | 21396 | 1 |

| 20 | 10118 | 1 |

| 20 | 8407 | 1 |

+------------------+------------------+---+

Adding port 20 to our candidate list. Now what? Let’s see what happens if we look at the top ‘connections’:

select firstseensrcport,

firstseendestport, count(*) c from pq_logs2 group by firstseensrcport,

firstseendestport having firstseensrcport not in (0,123,138,137,80,25,53,21,20,1900,3389,389)

and firstseendestport not in (0,123,138,137,80,25,53,21,20,3389,1900,389)

order by c desc limit 10

+------------------+------------------+----+

| firstseensrcport | firstseendestport| c |

+------------------+------------------+----+

| 1984 | 4244 | 11 |

| 1984 | 3198 | 11 |

| 1984 | 4232 | 11 |

| 1984 | 4276 | 11 |

| 1984 | 3212 | 11 |

| 1984 | 4247 | 11 |

| 1984 | 3391 | 11 |

| 1984 | 4233 | 11 |

| 1984 | 3357 | 11 |

| 1984 | 4252 | 11 |

+------------------+------------------+----+

Interesting. Looking through the data where the source port is actually 1984, we can see that a lot of the destination ports are showing increasing numbers. For example:

| 1984 | 2228 | 172.10.0.6 | 172.10.1.118 |

| 1984 | 2226 | 172.10.0.6 | 172.10.1.147 |

| 1984 | 2225 | 172.10.0.6 | 172.10.1.141 |

| 1984 | 2224 | 172.10.0.6 | 172.10.1.115 |

| 1984 | 2223 | 172.10.0.6 | 172.10.1.120 |

| 1984 | 2222 | 172.10.0.6 | 172.10.1.121 |

| 1984 | 2221 | 172.10.0.6 | 172.10.1.135 |

| 1984 | 2220 | 172.10.0.6 | 172.10.1.126 |

| 1984 | 2219 | 172.10.0.6 | 172.10.1.192 |

| 1984 | 2217 | 172.10.0.6 | 172.10.1.141 |

| 1984 | 2216 | 172.10.0.6 | 172.10.1.173 |

| 1984 | 2215 | 172.10.0.6 | 172.10.1.116 |

| 1984 | 2214 | 172.10.0.6 | 172.10.1.120 |

| 1984 | 2213 | 172.10.0.6 | 172.10.1.115 |

| 1984 | 2212 | 172.10.0.6 | 172.10.1.126 |

| 1984 | 2211 | 172.10.0.6 | 172.10.1.121 |

| 1984 | 2210 | 172.10.0.6 | 172.10.1.172 |

| 1984 | 2209 | 172.10.0.6 | 172.10.1.119 |

| 1984 | 2208 | 172.10.0.6 | 172.10.1.173 |

That would hint at this guy being actually a destination port. You can also query for all the records that have the destination port set to 1984, which will show that a lot of the source ports in those connections are definitely source ports, another hint that we should add 1984 to our list of actual ports. Continuing our journey, I found something interesting. I was looking for all connections that don’t have a source or destination port in our candidate list and sorted by the number of occurrences:

+------------------+------------------+---+

| firstseensrcport | firstseendestport| c |

+------------------+------------------+---+

| 62559 | 37321 | 9 |

| 62559 | 36242 | 9 |

| 62559 | 19825 | 9 |

| 62559 | 10468 | 9 |

| 62559 | 34395 | 9 |

| 62559 | 62556 | 9 |

| 62559 | 9005 | 9 |

| 62559 | 59399 | 9 |

| 62559 | 7067 | 9 |

| 62559 | 13503 | 9 |

| 62559 | 30151 | 9 |

| 62559 | 23267 | 9 |

| 62559 | 56184 | 9 |

| 62559 | 58318 | 9 |

| 62559 | 4178 | 9 |

| 62559 | 65429 | 9 |

| 62559 | 32270 | 9 |

| 62559 | 18104 | 9 |

| 62559 | 16246 | 9 |

| 62559 | 33454 | 9 |

This is strange insofar as this source port seems to connect to totally random ports, in a way that doesn’t make any sense. Is this another legitimate destination port? I am not sure. It’s way too high and I don’t want to put it on our list. Open question. No idea at this point. Anyone?

Moving on without this 62559, we see the same behavior for 62560 and then 51357 and 51358, as well as 45031, 45032, 45033. And it keeps going like that. Let’s see which machines are involved in this traffic. Sorry, not the nicest SQL, but it works:

select firstseensrcip, firstseendestip, count(*) c

from pq_logs2 group by firstseensrcip, firstseendestip,firstseensrcport

having firstseensrcport in (62559, 62561, 62560, 51357, 51358)

order by c desc limit 10

+----------------+-----------------+-------+

| firstseensrcip | firstseendestip | c |

+----------------+-----------------+-------+

| 10.9.81.5 | 172.10.0.40 | 65534 |

| 10.9.81.5 | 172.10.0.4 | 65292 |

| 10.9.81.5 | 172.10.0.4 | 65272 |

| 10.9.81.5 | 172.10.0.4 | 65180 |

| 10.9.81.5 | 172.10.0.5 | 65140 |

| 10.9.81.5 | 172.10.0.9 | 65133 |

| 10.9.81.5 | 172.20.0.6 | 65127 |

| 10.9.81.5 | 172.10.0.5 | 65124 |

| 10.9.81.5 | 172.10.0.9 | 65117 |

| 10.9.81.5 | 172.20.0.6 | 65099 |

+----------------+-----------------+-------+

Here we have it. Probably an attacker :). This guy is doing not so nice things. We should exclude this IP from our analysis of ports. This guy is just all over.

Now, we continue along similar lines and find which machines are using ports 45033, 45034, 45035:

select firstseensrcip, firstseendestip, count(*) c

from pq_logs2 group by firstseensrcip, firstseendestip,firstseensrcport

having firstseensrcport in (45035, 45034, 45033) order by c desc limit 10

+----------------+-----------------+-------+

| firstseensrcip | firstseendestip | c |

+----------------+-----------------+-------+

| 10.10.11.15 | 172.20.0.15 | 61337 |

| 10.10.11.15 | 172.20.0.3 | 55772 |

| 10.10.11.15 | 172.20.0.3 | 53820 |

| 10.10.11.15 | 172.20.0.2 | 51382 |

| 10.10.11.15 | 172.20.0.15 | 51224 |

| 10.15.7.85 | 172.20.0.15 | 148 |

| 10.15.7.85 | 172.20.0.15 | 148 |

| 10.15.7.85 | 172.20.0.15 | 148 |

| 10.7.6.3 | 172.30.0.4 | 30 |

| 10.7.6.3 | 172.30.0.4 | 30 |

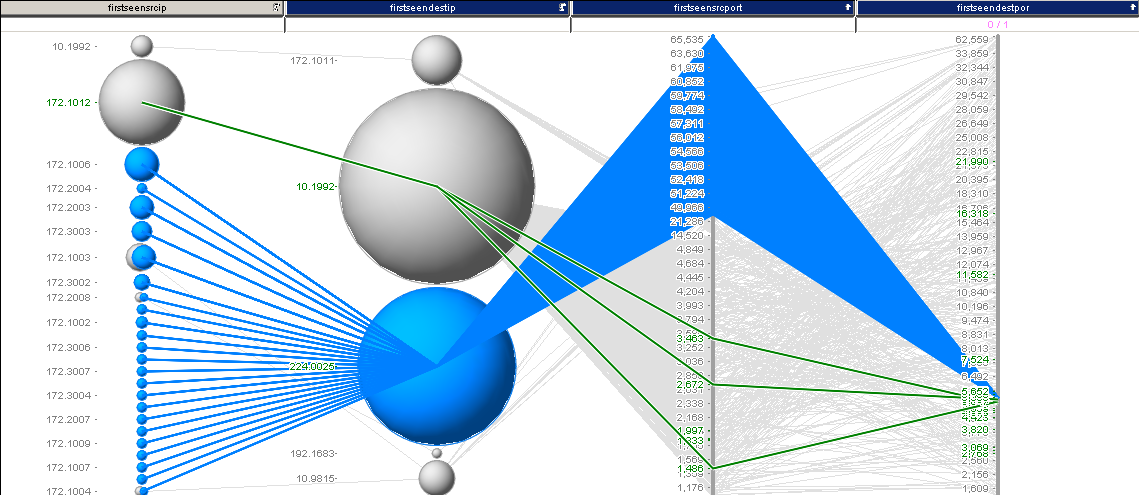

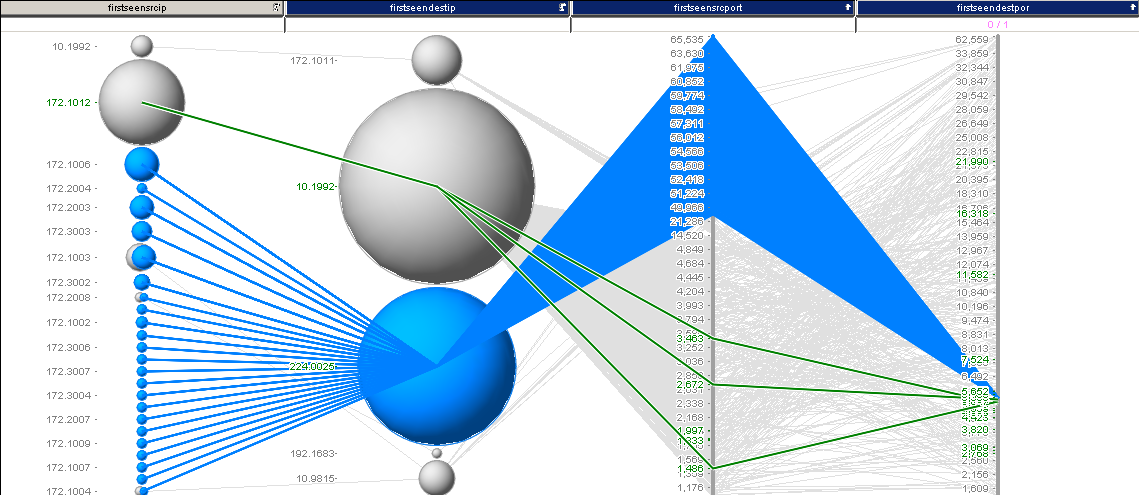

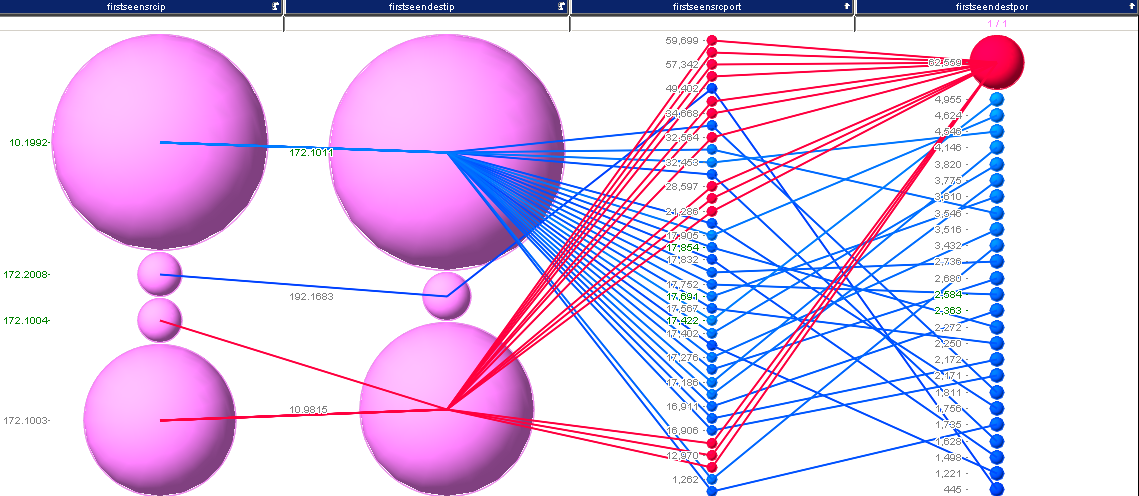

We see one dominant IP here. Probably another ‘attacker’. So we exclude that and see what we are left with. Now, this is getting tedious. Let’s just visualize some of the output to see what’s going on. Much quicker! And we only have 36970 records unaccounted for.

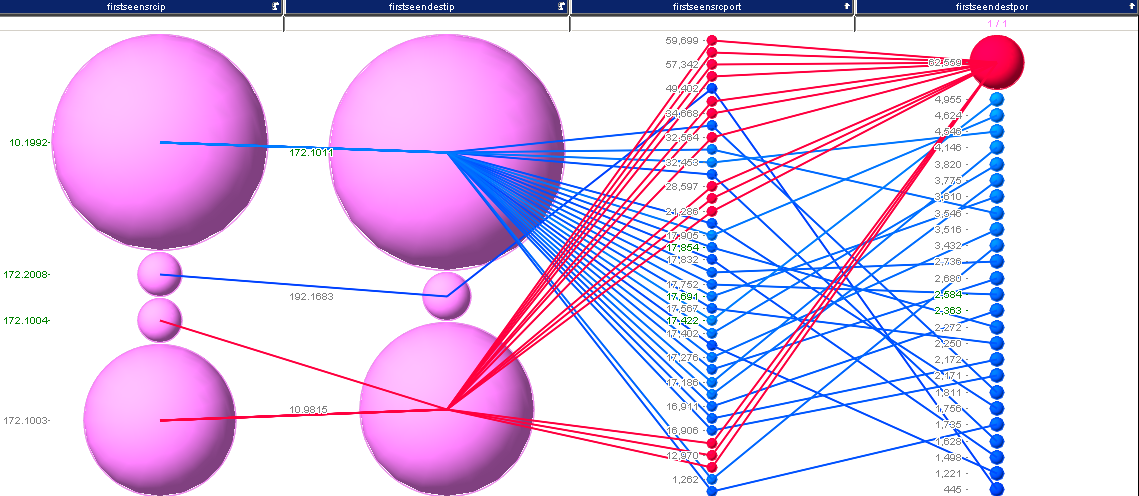

What you can see is the remainder of traffic. Very quickly we see that there is one dominant IP address. We are going to filter that one out. Then we are left with this:

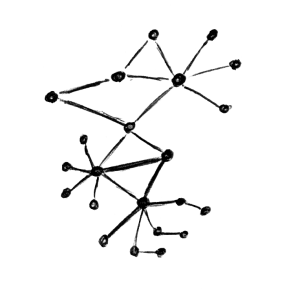

I selected some interesting traffic here. Turns out, we just found another destination port for our list: 5355. I continued this analysis and ended up with something like 38 records, which are shown in the last image:

I’ll leave it at this for now. I think that’s a pretty good set of ports:

20,21,25,53,80,123,137,138,389,1900,1984,3389,5355

Oh well, if you want to fix your traffic now and turn around the wrong source/destination pairs, here is a hack in perl:

$ cat nf*.csv | perl -F, -ane 'BEGIN {@ports=(20,21,25,53,80,123,137,138,389,1900,1984,3389,5355);

%hash = map { $_ => 1 } @ports; $c=0} if ($hash{$F[7]} && $F[8]>1024)

{$c++; printf"%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s",

$F[0],$F[1],$F[2],$F[3],$F[4],$F[6],$F[5],$F[8],$F[7],$F[9],$F[10],$F[11],$F[13],$F[12],

$F[15],$F[14],$F[17],$F[16],$F[18]} else {print $_} END {print STDERR "count of reversed records: $c\n";}'

We could have switched to visual analysis way earlier, which I did in my initial analysis, but for the blog I ended up going way further in SQL than I probably should have. The next blog post covers how to load all of the VAST data into a Hadoop / Impala setup.
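For those who prefer something more readable than a one-liner, here is roughly the same logic as a Python sketch. The names KNOWN_PORTS, SWAP_PAIRS and fix_record are made up for illustration. It applies the same rule as the Perl hack: if the source port is on our list and the destination port is above 1024, swap every source/destination column pair.

```python
KNOWN_PORTS = {20, 21, 25, 53, 80, 123, 137, 138, 389, 1900, 1984, 3389, 5355}

# 0-based column pairs to swap: IPs, ports, payload bytes, total bytes, packet counts.
SWAP_PAIRS = [(5, 6), (7, 8), (12, 13), (14, 15), (16, 17)]


def fix_record(line):
    """Return (line, was_swapped) with source and destination flipped if needed."""
    fields = line.rstrip("\n").split(",")
    if len(fields) != 19 or not (fields[7].isdigit() and fields[8].isdigit()):
        return line.rstrip("\n"), False  # header or malformed line: leave untouched
    src_port, dest_port = int(fields[7]), int(fields[8])
    if src_port in KNOWN_PORTS and dest_port > 1024:
        for a, b in SWAP_PAIRS:
            fields[a], fields[b] = fields[b], fields[a]
        return ",".join(fields), True
    return ",".join(fields), False


reversed_record = ("1364807428.917824,2013-04-01 09:10:28,20130401091028.917824,6,TCP,"
                   "172.10.0.4,172.10.2.64,80,14545,0,0,0,7425,0,7865,0,8,0,0")
fixed, swapped = fix_record(reversed_record)
print(swapped)
print(fixed)
```

Like the Perl version, this leaves records alone where both ports are on the list or both are above 1024, so it is still a heuristic and not a guaranteed fix.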

July 11, 2013

This is a slide I built for my Visual Analytics Workshop at BlackHat this year. I tried to summarize all the SIEM and log management vendors out there. I am pretty sure I missed some players. What did I miss? I’ll try to add them before the training.

Enjoy!

Here is the list of vendors that are on the slide (in no particular order):

Log Management

- Tibco

- KeyW

- Tripwire

- Splunk

- Balabit

- Tier-3 Systems

SIEM

- HP

- Symantec

- Tenable

- Alienvault

- Solarwinds

- Attachmate

- eIQ

- EventTracker

- BlackStratus

- TrustWave

- LogRhythm

- ClickSecurity

- IBM

- McAfee

- NetIQ

- RSA

- Event Sentry

Logging as a Service

- SumoLogic

- Loggly

- PaperTrail

- Torch

- AlertLogic

- SplunkStorm

- logentries

- eGestalt

Update: With input from a couple of folks, I updated the slide a couple of times.

March 24, 2012

There are cases where you need fairly sophisticated logic to visualize data. Network graphs are a great way to help a viewer understand relationships in data. In my last blog post, I explained how to visualize network traffic. Today I am showing you how to extend your visualization with some more complicated configurations.

This blog post was inspired by an AfterGlow user who emailed me last week asking how he could keep a list of port numbers to drive the color in his graph. Here is the code snippet that I suggested he use:

variable=@ports=qw(22 80 53 110);

color="green" if (grep(/^\Q$fields[0]\E$/,@ports))

Put this in a configuration file and invoke AfterGlow with it:

perl afterglow.pl -c file.config | ...

What this does is color all nodes green if they are part of the list of ports (22, 80, 53, 110). I am using $fields[0] to reference the first column of data. You could also use the function fields() to reference any column in the data.

Another way to define the variable is by looking it up in a file. Here is an example:

variable=open(TOR,"tor.csv"); @tor=<TOR>; close(TOR);

color="red" if (grep(/^\Q$fields[1]\E$/,@tor))

This time you put the list of items in a file and read it into an array. Remember, it’s just Perl code that you execute after the variable= statement. Anything goes!

I am curious what you will come up with. Post your experiments and questions on secviz.org!

Read more about how to use AfterGlow in security visualization.

March 21, 2012

Have you ever collected a packet capture and you needed to know what the collected traffic is about? Here is a quick tutorial on how to use AfterGlow to generate link graphs from your packet captures (PCAP).

I am sitting at the 2012 Honeynet Project Security Workshop. One of the trainers of a workshop tomorrow just approached me and asked me to help him visualize some PCAP files. I thought it might be useful for other people as well. So here is a quick tutorial.

Installation

To start with, make sure you have AfterGlow installed. This means you also need to install GraphViz on your machine!

First Visualization Attempt

The first attempt at visualizing tcpdump traffic is the following:

tcpdump -vttttnnelr file.pcap | parsers/tcpdump2csv.pl "sip dip" | perl graph/afterglow.pl -t | neato -Tgif -o test.gif

I am using the tcpdump2csv parser to deal with the source/destination confusion. The problem with this approach is that if your output format is slightly different to the regular expression used in the tcpdump2csv.pl script, the parsing will fail [In fact, this happened to us when we tried it here on someone else’s computer].

It is more elegant to use something like Argus to do this. They do a much better job at protocol parsing:

argus -r file.pcap -w - | ra -r - -nn -s saddr daddr -c, | perl graph/afterglow.pl -t | neato -Tgif -o test.gif

When you do this, make sure that you are using Argus 3.0 or newer. If you are not, ragator does not have the -c option!

From here you can go in all kinds of directions.

Using other data fields

argus -r file.pcap -w - | ra -r - -nn -s saddr daddr dport -c, | perl graph/afterglow.pl | neato -Tgif -o test.gif

Here I added the dport to the parameters. Also note that I had to remove the -t parameter from the afterglow command. This tells AfterGlow that there are not two, but three columns in the CSV file.

Or use this:

argus -r file.pcap -w - | ra -r - -nn -s daddr dport ttl -c, | perl graph/afterglow.pl | neato -Tgif -o test.gif

This uses the destination address, the destination port and the TTL to plot your graph. Pretty neat …

AfterGlow Properties

You can define your own property file to define the colors for the nodes, configure clustering, change the size of the nodes, etc.

argus -r file.pcap -w - | ra -r - -nn -s daddr dport ttl -c, | perl graph/afterglow.pl -c graph/color.properties | neato -Tgif -o test.gif

Here is an example config file that is not as straightforward as the default one that is included in the AfterGlow distribution:

color="white" if ($fields[2] =~ /foo/)

color="gray50"

size.target=$targetCount{$targetName};

size=0.5

maxnodesize=1

The config uses the number of times the target shows up as the size of the target node.

Comments / Examples / Questions?

Obviously comments and questions are more than welcome. Also make sure that you post your example graphs on secviz.org!

March 16, 2012

Big data doesn’t help us to create security intelligence! Big data is like your relational database. It’s a technology that helps us manage data. We still need the analytical intelligence on top of the storage and processing tier to make sense of everything. Visual analytics anyone?

A couple of weeks ago I hung out around the RSA conference and walked the show floor. Hundreds of companies exhibited their products. The big topics this year? Big data and security intelligence. Seems like this was MY conference. Well, not so fast. Marketing does unfortunately not equal actual solutions. Here is an example out of the press. Unfortunately, these kinds of things shine the light on very specific things; in this case, the use of hadoop for security intelligence. What does that even mean? How does it work? People seem to not really care, but only hear the big words.

Here is a quick side-note or anecdote. After the big data panel, a friend of mine comes up to me and tells me that the audience asked the panel a question about how analytics played into the big data environment. The panel huddled, discussed, and said: “Ask Raffy about that“.

Back to the problem. I have been reading a bunch lately about SIEM being replaced or superseded by big data infrastructure. That’s completely and utterly stupid. These are not competing technologies. They are complementary. If anything, SIEM will be replaced by some other analytical capabilities that are leveraging big data infrastructures. Big data is like RDBMS. New analytical capabilities are like the SIEMs (correlation rules, parsed data, etc.). For example, using big data, who is going to write your parsers for you? SIEMs have spent a lot of time and resources on things like parsers; big data solutions will need to do the same! Yes, there are a couple of things that you can do with big data approaches and unparsed data. However, most discussions out there do not discuss those uses.

In the context of big data, people also talk about leveraging multiple data sources and new data sources. What’s the big deal? We have been talking about that for 6 years (or longer). Yes, we want video feeds, but how do you correlate a video with a firewall log? Well, you process the video and generate events from it. We have been doing that all along. Nothing new there.

What HAS changed is that we now have the means to store and process the data; any data. However, nobody really knows how to process it.

Let’s start focusing on analytics!

September 13, 2011

I just returned from Taipei where I was teaching log analysis and visualization classes for Trend Micro. Three classes of 20 students each. I am surprised that my voice is still okay after all that talking. It’s probably all the tea I was drinking.

The class schedule looked as follows:

Day 1: Log Analysis

- data sources

- data analysis and visualization linux (davix)

- log management and siem overview

- application logging guidelines

- log data processing

- loggly introduction

- splunk introduction

- data analysis with splunk

Day 2: Visualization

- visualization theory

- data visualization tools and libraries

- perimeter threat use-cases

- host-based data analysis in splunk

- packet capture analysis in splunk

- loggly api overview

- visualization resources

The class was accompanied by a number of exercises that helped the students apply the theory we talked about. The exercises are partly pen and paper and partly hands-on data analysis of sample logs with the davix live CD.

I love Taipei, especially the food. I hope I’ll have a chance to visit again soon.

PS: If you are looking for a list of visualization resources, they got moved over to secviz.

September 8, 2011

Analyzing log files can be a very time consuming process and it doesn’t seem to get any easier. In the past 12 years I have been on both sides of the table. I have analyzed terabytes of logs and I have written a lot of code that generates logs. When I started writing Loggly’s middleware, I thought it was going to be really easy and fun to finally write the perfect application logs. Guess what, I was wrong. Although I have seen pretty much any log format out there, I had the hardest time coming up with a decent log format for ourselves. What’s a good log format anyways? The short answer is: “One that enables analytics or actions.”

I was sufficiently motivated to come up with a good log format that I decided to write a paper about application logging guidelines. The paper has two main parts: Logging Guidelines and a reference architecture for a cloud service. In the first part I am covering the questions of when to log, what to log, and how to log. It’s not as easy as you might think. The most important thing to constantly keep in mind is the use of the logs. Especially for the question of what to log, you need to keep the log consumer in mind. Are the logs consumed by a human? Are they consumed by a log management tool? What are the people looking at the logs trying to do? Debugging the application? Monitoring performance? Detecting security violations? Depending on the answers to these questions, you might change the places in your code where you emit log records. (Or even better, you log in all places and add a use-case indicator as a field to your logs.)
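To illustrate the use-case indicator, here is a minimal sketch using Python's standard logging module. The field name use_case, the key=value layout and the logger name are my assumptions for this example; they are not taken from the paper.

```python
import io
import logging


def build_logger(stream):
    """Create a logger that writes key=value records including a use_case field."""
    logger = logging.getLogger("app.example")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(
        'time=%(asctime)s level=%(levelname)s use_case=%(use_case)s msg="%(message)s"'
    ))
    logger.addHandler(handler)
    return logger


stream = io.StringIO()
log = build_logger(stream)
# Every call has to pass use_case via extra, because the format string requires it.
log.info("login failed for user=%s", "alice", extra={"use_case": "security"})
log.info("request took %d ms", 231, extra={"use_case": "performance"})
print(stream.getvalue(), end="")
```

A log consumer can then filter on use_case=security or use_case=performance instead of guessing the purpose of a record from its message text.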

The paper is a starting point and not a definitive guide. I would expect readers to challenge it and come up with improvements and refinements of use-cases and also the exact contents of the log records. I’d love to hear from practitioners and get a dialog going.

As a side note: CEE, the Common Event Expression standard, covers parts of what I am talking about in the paper. However, the paper’s focus is mainly on defining guidelines for application developers; establishing a baseline of when log entries should be recorded and what information should be included.

Resources: Cloud Application Logging for Forensics – Paper – Presentation

February 14, 2011

I have wanted to post this review of the ‘draft-cloud-log-00‘ for a while now. Here it finally goes. In short, there is no need for a cloud-logging standard; what is needed is a way to deal with virtualization use-cases, ideally as part of another logging standard, such as CEE.

The cloud-log-00 draft is meant to define a standard around a logging format that can be used to correlate messages generated on different physical or virtual machines but belonging to the same ‘user request’. The main contribution of the current draft proposal is that it adds a structured element to syslog (RFC 5424) messages. It outlines a number of IDs that can be and should be used for this purpose.

This analysis of the proposed draft outlines a number of significant shortcomings of the current draft-cloud-log-00 and motivates why it is a bad idea to pursue this or any other cloud logging standard any further. I urge the working group and IETF to not move forward with this draft, but join forces with other standards, such as CEE (cee.mitre.org) and make sure that any special requirements or use-cases can be handled with such.

Following is a more detailed analysis of the draft proposal. I am starting with a generic analysis of the necessity for such a standard and how this draft positions itself:

- Section 3.2 outlines the motivation and objective for the proposed standard. The section outlines the problem of attributing ‘user requests’ to physical machine instances. This is not a problem that is unique to cloud installations. It’s a problem that was introduced through virtualization. The section fails to mention a real challenge and use-case for defining a cloud-based logging standard.

- The motivation, if loosely interpreted, talks about operational and security challenges because of a lack of information in the logs, which leads to problems of attribution (see last paragraph). The section fails to identify supporting use-cases that link the draft and proposed solution to the security and operational challenges. More detail is definitely needed here. The draft suggests the introduction of user IDs to (presumably) solve this problem. What is the relationship between the two? [See below where I argue that something like a guest ID or a hypervisor ID is needed to identify the individual components]

- One more detail about section 3.2. It talks about how operating system (“Linux or Windows VMs”) log files will very likely be irrelevant since one cannot tie those logs to the physical entities. This is absolutely not true. Why would one need to be able to tie these logs to physical machines? If the virtual CPU runs at 100%, that is a problem. No need to relate that back to the physical hardware. It’s irrelevant. A discussion of layers (see below) would help a lot here and it would show that the stated problems are in fact nonexistent. Also, why would I need to know how many users (including their roles) [quote from the draft] share the same hardware? What does that matter? I can rely completely on my virtual instances and plan load accordingly!

- The proposal needs to differentiate different layers of information, which correlate with different layers where logs can be generated. There is the physical layer, the virtualization layer which is generally also called the hypervisor, then there is the guest operating system and then there are applications running inside of the guest operating system. The proposal does not mention any of these layers and does not outline how these layers interact. Especially with regards to sharing IDs across these layers, a discussion is needed. The layered model would also help to identify real problems and use-cases, which the draft fails to do.

- The proposal never defines the ‘cloud’, although the term is used in the title of the draft. It is not clear whether SaaS, PaaS, or IaaS is the target of this draft. If all of the above are targeted, there should be a discussion of that, including how the information (the IDs) is shared in those environments.

What follows is a more detailed analysis of, and questions about, the proposed approach of using various IDs to track requests:

- If an AID were useful, which the draft still has to motivate, how is that ID passed between different layers in the application stack? Who generates it? How does it help solve the initially stated problem of operational and security-related visibility and accountability? What is being used today in many applications is the UNIQUE_ID that can be generated by a Web server when receiving the request (see Apache UNIQUE_ID). That value can then be passed around. However, operating system resources and log entries cannot be tied uniquely to an application request. OS resources are generally shared across applications and it is not possible to attribute them to a specific application or request. The proposed approach of using an AID is not a solution for the initially stated problem.

- Section 3.1 outlines a generic problem statement for log management. Why is this important for this draft? There is no relationship to the rest of the draft. In addition, the section talks about routers, firewalls, network devices, applications, etc. How are you suggesting these devices share a common ID? There needs to be a protocol to exchange these IDs or you need a way to generate the IDs based on request attributes. I do not see any discussion of this in the draft. A router will definitely not include such an ID. The processing needed is way too expensive and would likely require application layer parsing. Again, the problem statement needs rewriting and rethinking.

- What is the transit field (Section 4.2)? It is neither motivated nor discussed anywhere.

- In general, the proposed set of fields seems like a random collection. How do we know that there are not more important fields that are missing? And what guarantees that the existing fields are good candidates to solve the stated problem? (Again, the draft needs to outline a real problem it is trying to solve. What is stated in the current draft is not sufficient.)

- The client entity (Section 4.2.1) is defined as either an IP address or a FQDN. From a consumer’s perspective, this can be very troublesome. If in some cases a FQDN is logged and in others an IP, a DNS lookup has to be performed in order to correlate the two entities. If this happens at the time of correlation and not at the time of log generation, the IP to FQDN mapping might have changed. This could result in a false correlation of two unrelated events!
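
The correlation hazard from the last bullet can be illustrated with a small sketch. It assumes a hypothetical in-memory DNS table (`DNS_AT`) whose mapping changes between the time of logging and the time of correlation. The host name, addresses, and event layout are made up for illustration and do not come from the draft.

```python
# Hypothetical DNS snapshots: the same FQDN points to different IPs over time.
DNS_AT = {
    "log_time": {"web1.example.com": "10.0.0.5"},
    "correlation_time": {"web1.example.com": "10.0.0.9"},  # mapping changed
}

# Events 1 and 2 come from the same client (logged once by FQDN, once by IP).
# Event 3 comes from an unrelated machine.
events = [
    {"id": 1, "client": "web1.example.com"},
    {"id": 2, "client": "10.0.0.5"},
    {"id": 3, "client": "10.0.0.9"},
]


def normalize(client, snapshot):
    """Map a client entity to an IP using the given DNS snapshot."""
    return DNS_AT[snapshot].get(client, client)


def correlate(events, snapshot):
    """Group event ids by the client IP resolved under one snapshot."""
    groups = {}
    for event in events:
        ip = normalize(event["client"], snapshot)
        groups.setdefault(ip, []).append(event["id"])
    return groups


resolved_when_logged = correlate(events, "log_time")
resolved_when_correlated = correlate(events, "correlation_time")
print(resolved_when_logged)
print(resolved_when_correlated)
```

Resolving at log generation time groups events 1 and 2 together, as intended. Resolving at correlation time groups event 1 with the unrelated event 3, which is exactly the false correlation described above.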

I would like to point out that the ‘cloud’, be that SaaS, PaaS, or IaaS, does not require a new logging standard! We have had multi-tier as well as virtualized architectures for years and they are the real building blocks of the ‘cloud’. None of the cloud-specific attributes, like elasticity, utility-based payment, etc., require anything specific from a logging point of view. If anything, we need a logging standard that can help with virtualized, highly asynchronous, and distributed architectures. But these are not issues that a logging standard should have to deal with. It’s the infrastructure that has to make these trackers or IDs available. For a complete logging standard, have a look at CEE, where multiple different building blocks are being put in place to solve all kinds of well-motivated problems associated with the interchange of messages, which result in log records.

I urge you not to move ahead with anything like a cloud-logging standard. The cloud is nothing special. Rather, CEE (cee.mitre.org) should be leveraged and possibly extended to take into account virtualization use-cases. This draft has a lot of logical flaws, motivational shortcomings, and inconsistencies. What is needed are communication capabilities and standards that help extract and exchange information between the different layers in the application or cloud stack. The application should be able to get information on which guest it is running in (something like a guest ID) and the machine it runs on. That way, visibility is created. However, this has nothing to do with a logging standard!
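
As a minimal sketch of that idea, the following shows an application that generates one ID per request at the tier that first receives it (in the spirit of Apache’s UNIQUE_ID) and attaches a guest ID obtained from the infrastructure to every log record. The `GUEST_ID` environment variable, the use of `uuid4` as the ID generator, and the field names are assumptions made for illustration; they come neither from the draft nor from CEE.

```python
import json
import os
import uuid


def new_request_id():
    """Generate an ID once, at the tier that first receives the request."""
    return uuid.uuid4().hex


def log_record(request_id, layer, message):
    """Build a JSON log record carrying the request ID and the guest ID."""
    return json.dumps({
        "request_id": request_id,
        # Hypothetical: the infrastructure exposes the guest ID via an env var.
        "guest_id": os.environ.get("GUEST_ID", "unknown"),
        "layer": layer,
        "msg": message,
    }, sort_keys=True)


def handle_request():
    """Pass the same request ID down through the application tiers."""
    rid = new_request_id()
    return [
        log_record(rid, "web", "request received"),
        log_record(rid, "app", "business logic executed"),
        log_record(rid, "db", "query issued"),
    ]


records = [json.loads(line) for line in handle_request()]
for record in records:
    print(record)
```

Note that the IDs are supplied by the application and the infrastructure. The log format only carries them, which is why this is an infrastructure question and not a logging-standard question.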

There are cases where you need fairly sophisticated logic to visualize data. Network graphs are a great way to help a viewer understand relationships in data. In my last blog post, I explained how to


Big data doesn’t help us to create security intelligence! Big data is like your relational database. It’s a technology that helps us manage data. We still need the analytical intelligence on top of the storage and processing tier to make sense of everything.


I just returned from Taipei where I was teaching log analysis and visualization classes for Trend Micro. Three classes of 20 students each. I am surprised that my voice is still okay after all that talking. It’s probably all the tea I was drinking.

The class was accompanied by a number of exercises that helped the students apply the theory we talked about. The exercises are partly pen and paper and partly hands-on data analysis of sample logs with the

Analyzing log files can be a very time consuming process and it doesn’t seem to get any easier. In the past 12 years I have been on both sides of the table. I have analyzed terabytes of logs and I have written a lot of code that generates logs. When I started writing Loggly’s middleware, I thought it was going to be really easy and fun to finally write the perfect application logs. Guess what, I was wrong. Although I have seen pretty much any log format out there, I had the hardest time coming up with a decent log format for ourselves. What’s a good log format anyways? The short answer is: “One that enables analytics or actions.”