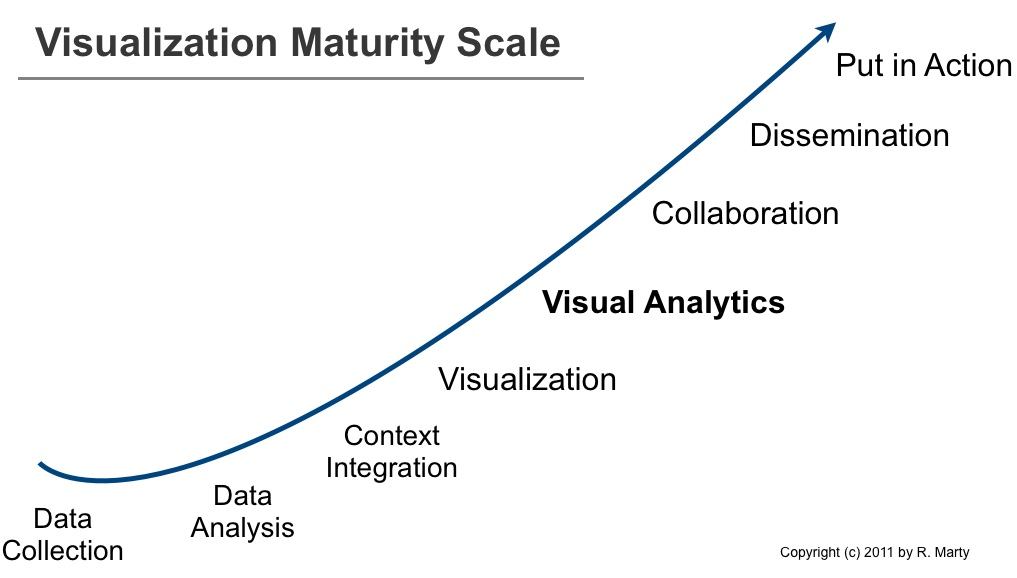

The visualization maturity scale can be used to explain a number of issues in the visual analytics space. For example, why aren’t companies leveraging visualization to analyze their data? What are the requirements to implement visual analytics services? Or why don’t we have more visual analytics products?

About three years ago I posted the log management maturity scale. The maturity scale helped explain why companies and products are not as advanced as they should be in the log management, log analysis, and security information management space.

While preparing my presentation for the cyber security grand challenge meeting in early December, I developed the maturity scale for information visualization that you can see above.

Companies that are implementing visualization processes move through each of the steps from left to right. So do product companies that build visualization applications. In order to build products on the right-hand side, they need to support the pieces to the left. Let’s have a look at the different stages in more detail:

- Data Collection: No data, no visuals (see also Where Data Analytics and Security Collide). This is the foundation. Data needs to be available and accessible. Generally it is centralized in a big data store (it used to be relational databases and that’s a viable solution as well). This step generally involves parsing data, that is, turning unstructured or semi-structured data into structured data. Although a fairly old problem, this is still a huge issue. I wonder if anyone is going to come up with a novel solution in this space anytime soon! The traditional regular expression based approach just doesn’t scale.

- Data Analysis: Once data is centralized or accessible via a federated data store, you have to do something with it. A lot of companies are using Excel to do the first iteration of data analysis. Some are using R, SAS, or other statistics and data analytics software. One of the core problems here is data cleansing. Another huge problem is understanding the data itself. Not every data set is as self-explanatory as sales data.

- Context Integration: Often we collect data, analyze it, and then realize that the data doesn’t really contain enough information to understand it. Take network security, for example: what does the machine behind a specific IP address do? Is it a Web server? This is where we start adding more context: roles of machines, roles of users, etc. This can significantly increase the value of data analytics.

- Visualization: Let’s be clear about what I refer to as visualization. I am using visualization to mean reporting and dashboards. Reports are static summaries of historical data. They help communicate information. Dashboards are used to communicate information in real-time (or near real-time) to create situational awareness.

- Visual Analytics: This is where things are getting interesting. Interactive interfaces are used as a means to understand and reason about the data. Often linked views, brushing, and dynamic queries are key technologies used to give the user the most freedom to look at and analyze the data.

- Collaboration: It is one thing to have one analyst look at data and apply his/her own knowledge to understand the data. It’s another thing to have people collaborate on data and use their joint ‘wisdom’.

- Dissemination: Once an analysis is done, the job of the analyst is not. The newly found insights have to be shared and communicated to other groups or people in order for them to take action based on the findings.

- Put in Action: This could be regarded as part of the dissemination step. This step is about operationalizing the information. In the case of security information management, this is where the knowledge is encoded in correlation rules to catch future instances of the same or similar incidents.
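
To make a few of these stages more tangible, here is a minimal Python sketch that walks a handful of log lines through data collection (parsing), context integration, and a very simple 'put in action' rule. The log format, the field names, the `MACHINE_ROLES` table, and the rule itself are all assumptions made up for illustration; a real deployment would have its own formats and a proper correlation engine.

```python
import re

# Hypothetical log format, assumed for illustration only:
# "<timestamp> <source IP> -> <destination IP>:<port> <action>"
LINE_PATTERN = re.compile(
    r"(?P<timestamp>\S+) (?P<src>\d+\.\d+\.\d+\.\d+) -> "
    r"(?P<dst>\d+\.\d+\.\d+\.\d+):(?P<port>\d+) (?P<action>\w+)"
)

# Hypothetical context table mapping IP addresses to machine roles.
MACHINE_ROLES = {
    "10.0.0.5": "web server",
    "10.0.0.9": "database server",
}


def parse_line(line):
    """Data collection: turn one raw log line into a structured record."""
    match = LINE_PATTERN.match(line)
    if match is None:
        return None  # unparseable lines are skipped rather than guessed at
    record = match.groupdict()
    record["port"] = int(record["port"])
    return record


def add_context(record):
    """Context integration: annotate the record with the destination's role."""
    enriched = dict(record)
    enriched["dst_role"] = MACHINE_ROLES.get(record["dst"], "unknown")
    return enriched


def denied_database_access(record):
    """Put in action: a simple rule standing in for a correlation rule."""
    return record["action"] == "DENY" and record["dst_role"] == "database server"


raw_lines = [
    "2012-01-22T10:10:00 192.168.1.20 -> 10.0.0.5:80 ALLOW",
    "2012-01-22T10:10:03 192.168.1.21 -> 10.0.0.9:3306 DENY",
    "garbage that does not match",
]

# Parse every line, drop the ones that did not parse, then add context.
records = [add_context(r) for r in map(parse_line, raw_lines) if r is not None]

# Apply the rule to the enriched records.
alerts = [r for r in records if denied_database_access(r)]
```

Note that the rule only works because the context step ran first: without the machine role, the record is just an IP address and a port. That is the dependency between stages the scale is meant to express.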

For an end user, the visualization maturity scale outlines the individual steps he/she has to go through in order to achieve analytical maturity. In order to implement the ‘put in action’ step, users need to implement all of the steps on the left of the scale.

For visualization product companies, the scale means that in order to have a product that lets a user put findings into action, they have to support all the left-hand stages. There needs to be a data collection piece and a data store. The data needs to be pre-analyzed: operations like data cleansing, aggregation, filtering, or even the calculation of certain statistical properties fall into this step. Context is not always necessary, but often adds to the usefulness of the data. The same reasoning applies to the remaining stages.
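
As a rough illustration of what such pre-analysis might look like, the following Python sketch cleanses a few records, aggregates them per source, computes simple statistics, and filters on a threshold. The record layout, the sample values, and the threshold of 2000 bytes are assumptions chosen only for the example.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: bytes transferred per source IP, as raw strings.
raw = [
    {"src": "192.168.1.20", "bytes": "512"},
    {"src": "192.168.1.20", "bytes": "2048"},
    {"src": "192.168.1.21", "bytes": ""},      # missing value
    {"src": "192.168.1.21", "bytes": "1024"},
    {"src": "", "bytes": "300"},               # missing source
]


def cleanse(rows):
    """Drop rows with missing fields and convert byte counts to integers."""
    clean = []
    for row in rows:
        if row["src"] and row["bytes"].isdigit():
            clean.append({"src": row["src"], "bytes": int(row["bytes"])})
    return clean


def aggregate(rows):
    """Group byte counts by source and compute count, total, and mean."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["src"]].append(row["bytes"])
    return {
        src: {"count": len(values), "total": sum(values), "mean": mean(values)}
        for src, values in grouped.items()
    }


summary = aggregate(cleanse(raw))

# Filtering: keep only sources above a (hypothetical) volume threshold.
heavy = {src: stats for src, stats in summary.items() if stats["total"] > 2000}
```

A visualization layer would then draw from `summary` or `heavy` rather than from the raw records, which is why these steps sit to the left of visualization on the scale.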

A number of products, both open source and commercial, solve many of the left-hand-side problems. Document-oriented databases (e.g., MongoDB), MapReduce frameworks (e.g., Hadoop), and search engines like ElasticSearch are great open source examples of such technologies. In the commercial space you will find companies like Karmasphere or Datameer tackling these problems.

Comments? Chime in!

What about Objectivity/DB?

Comment by Patricia — January 22, 2012 @ 10:10 pm

You are asking why I am not mentioning Objectivity in the last paragraph on technologies? Well, this is definitely not a comprehensive list at all. There are many more technologies and companies. In fact, tons of them! Objectivity is an older player, has been around longer. I think what sometimes happens is that those players have a harder time marketing themselves in emerging markets that they have really been part of a while back already. I really don’t see / hear about them much at all. But that’s also because I am roaming more in the startup world.

Comment by Raffael Marty — January 22, 2012 @ 10:51 pm

This sounds so interesting, that a visualization maturity scale can show progress in a company. Great post Raffael

Comment by Rooby G — January 25, 2012 @ 11:17 pm

Interesting post, though I would be careful about segmenting the different processes into discrete steps. In reality these processes are very iterative starting from Data Collection all the way up to collaboration. These 4 steps (excluding Visualization, because defined as a reporting dashboard it should be near collaboration or Dissemination, as that is what dashboards excel at) maintain a tight loop because when you visually analyze your data you often realize you need to go back to the cleaning or collection stage. I would actually argue visual analytics should be used for the data cleaning stage as dirty data can easily be revealed by interacting with different visualizations.

Comment by Kyle Melnick — February 10, 2012 @ 9:55 am

Kyle, thanks for your comment. I agree with you from a process standpoint. If you look at the information visualization process, you are spot on. Keep in mind though that what I am presenting here is not a visualization process. It’s a maturity scale. I think some of your comments might still apply, like the fact that dissemination could come before collaboration and Visual Analytics. Definitely debatable. Not sure how to show that on this scale though.

Comment by Raffael Marty — February 12, 2012 @ 5:54 pm

hello,

I have some questions about your visualization maturity scale:

1 – Why put “data analysis” before “context integration”?

2 – What is the difference between “data analysis” and “visualization”?

I’m asking this because I think that the first thing to do after “data collection” is to set a context (meta-information) on it. However, “visualization” is an analysis of the contextualized data, so I don’t understand the meaning of “data analysis” as a standalone step. Moreover, you defined “data analysis” by using tools/languages like R, SAS, Wrangler, but this is what you called visualization.

Regards,

Comment by atome — April 4, 2013 @ 12:12 am

Thanks for the comment.

The reason for putting ‘data analysis’ before ‘context’ is that if you have data, you first look at it to understand roughly what it is about. You have to do some initial analysis to play with the data and get a high-level feel for what the data is about. Only then can you decide what context or additional data you might need to augment your analysis. And often that context is not available, hard to get, or the analyst hasn’t even thought of it.

As to your second question or comment, visualization is often included as one of the disciplines of ‘data mining’. I used data analysis to identify the algorithms you run on the data. Anything from ‘simple’ statistics to clustering, dimensionality reduction, etc. The output of those algorithms can then be visualized to communicate more easily with the human analyst.

Hope this clarifies

Comment by Raffael Marty — April 4, 2013 @ 9:20 am

Ok thx Marty.

Comment by atome — April 7, 2013 @ 12:03 am